This month I wish to spend a bit of time delving into the zIIP processor(s) that, hopefully, you all have available to use “for free”. Naturally, they cost a few thousand bucks apiece, but their usage does not count towards the rolling four-hour average (R4HA), so they are basically free. Of course, if you have a different pricing model where CPU usage is all-inclusive, then the use of these handy little beasts is moot!

What Is It?

They were first introduced in 2006 with the IBM System z9 processor complex. Their full name is System z Integrated Information Processor, normally shortened to “zIIP”. They followed on from the earlier specialty engines: the zAAP, used for Java, and the IFL, used for Linux and z/VM. Originally, they were just for Db2 workloads, but nowadays quite a lot of non-Db2 work is zIIP eligible.

Eligible?

Yep, the wording is important! The fact that some function or code is *able* to run on a zIIP does not mean it *will* run on a zIIP. zIIPs are, after all, processors, and when they are all busy your eligible workload will just trundle on using the normal CPs (Central Processors) you have, if z/OS is allowed to let it (see IIPHONORPRIORITY below).

How Many?

It started out nice and easy… You could not have more zIIPs than CPs on a machine, so a 1:1 ratio. Then along came the zEC12 and changed the ratio to no more than 2:1. Nowadays, with the z16, IBM have thrown in the towel and there is no limit anymore!
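As a quick illustration of those rules, here is a Python sketch that returns the maximum number of zIIPs one machine could have for a given number of general CPs. The generation list (including z196, z13, z14 and z15, which the text does not name) and the function name `max_ziips` are my own assumptions; only the 1:1, 2:1 and no-limit rules come from the paragraph above.

```python
from typing import Optional

# Hypothetical helper. Generations in release order; business-class models
# are left out and the list deliberately stops at z16.
GENERATIONS = ["z9", "z10", "z196", "zEC12", "z13", "z14", "z15", "z16"]


def max_ziips(generation: str, general_cps: int) -> Optional[int]:
    """Maximum zIIPs allowed on one machine, or None when there is no ratio limit."""
    pos = GENERATIONS.index(generation)  # raises ValueError for unknown names
    if pos < GENERATIONS.index("zEC12"):
        return general_cps               # 1:1 before the zEC12
    if pos < GENERATIONS.index("z16"):
        return 2 * general_cps           # 2:1 from the zEC12 up to the z16
    return None                          # z16: ratio removed


print(max_ziips("z196", 4), max_ziips("zEC12", 4), max_ziips("z16", 4))
```

Returning `None` rather than a huge number keeps “no limit” explicit for the caller.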

When They Arrived

The first Db2 to exploit the zIIP was the “big change” version, DB2 V8, when everything went Unicode and long names on us all!

What have They Done for Us?

From the get go, remote SQL arriving over TCP/IP (DRDA) was eligible for offload to zIIP. This was a very, very good thing indeed and saved people mega-bucks. Parallel query child tasks running under a dependent enclave SRB (or an independent enclave SRB if the query came in over TCP/IP) also got zIIP support, as did parts of some utilities: the index build for LOAD, REORG and REBUILD INDEX (the portion of the index build that runs under a dependent enclave SRB) and also a portion of sorting.

Sorry, SRB What?

You might have noticed a TLA (Three Letter Abbreviation), “SRB”, occurring a lot in that text! So, what is an SRB and why is it so important? On mainframes, all work runs under one of two kinds of control block: Task Control Blocks and Service Request Blocks. Normally user programs, and system programs, run under a Task Control Block (the TCB that we all know and love), and that work runs on normal CPs, not zIIPs (Java being the big exception)! The Service Request Block (SRB), however, is for system service routines. A TCB creates one to do special stuff, and starting it is called “scheduling an SRB”. To do this, your program must be running in a higher authorized state called “supervisor state”. SRBs run in parallel with the TCB that scheduled them, and they cannot own storage, but they can use the storage of the TCB. For Db2 work, only these SRBs (enclave SRBs, to be precise) are eligible to be offloaded to a zIIP.

And Then?

Well, when IBM brought out the z13 they merged the zAAP support onto the zIIP, and since then the general direction has been: if a piece of work runs as an SRB, then it *can* be made zIIP eligible. This has led to a gradual increase in vendor uptake and IBM usage of these “helping hands”.

What about Db2 Usage?

In DB2 V9, IBM announced the actual, until then hidden, limits of use: up to 60% offload for remote SQL over TCP/IP, up to 80% for parallel queries, up to 100% for the eligible utility portions and, brand new, up to 100% for XML!
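To see what those ceilings mean in CPU seconds, here is a back-of-the-envelope Python sketch. It treats the “up to” percentages as best-case upper bounds and assumes free zIIP capacity is always available; the workload numbers and the function name `split_cpu` are hypothetical.

```python
# Best-case "up to" zIIP offload ceilings from the DB2 V9 figures above.
# Real offload can be lower: it also depends on free zIIP capacity.
OFFLOAD_CEILING = {
    "remote_sql": 0.60,  # TCP/IP (DRDA) remote SQL
    "parallel": 0.80,    # parallel query child tasks
}


def split_cpu(cpu_seconds_by_type):
    """Best-case split of CPU seconds into (zIIP seconds, general CP seconds)."""
    ziip = cp = 0.0
    for work_type, seconds in cpu_seconds_by_type.items():
        offloaded = seconds * OFFLOAD_CEILING[work_type]
        ziip += offloaded
        cp += seconds - offloaded
    return ziip, cp


# Hypothetical workload: 1,000 CPU seconds of remote SQL, 200 of parallel query.
ziip_s, cp_s = split_cpu({"remote_sql": 1000, "parallel": 200})
print(f"zIIP: {ziip_s:.0f} s, general CP (counts towards the R4HA): {cp_s:.0f} s")
```

Only the general CP part feeds the rolling four-hour average, which is where the savings come from.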

DB2 10

RUNSTATS was added (but *not* the distribution statistics and inline statistics parts), and Db2 buffer pools got 100% offload for prefetch and deferred write processing.

Db2 11

Not only did the B go lower case, but RUNSTATS got column group distribution statistics processing, and System Agent processing got up to 100% offload when running under enclave SRBs (except for P-lock negotiation). This included page set castout, log read, log write, index pseudo-delete cleanup and XML multi-version document cleanout.

It was also here that they created the zIIP “needs help” function for when a delay occurs. This is controlled by the z/OS IEAOPTxx parameter IIPHONORPRIORITY=YES|NO. YES is the default and lets zIIP-eligible work that is stuck waiting for a busy zIIP be shunted to a general CP. That might, or might not, be a good idea depending on your cost or time SLAs.
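To make that trade-off concrete, here is a deliberately crude Python model of a single interval: zIIP-eligible demand versus free zIIP capacity. The function name `dispatch` and the assumption that overflow moves to a CP instantly are my own simplifications; in reality z/OS first waits for the ZIIPAWMT interval, and the CPs must have spare capacity at the right priority.

```python
def dispatch(eligible_s, free_ziip_s, honor_priority=True):
    """Crude one-interval model (a simplification, not z/OS internals).

    eligible_s:     CPU seconds of zIIP-eligible work wanting to run
    free_ziip_s:    zIIP seconds available in the interval
    honor_priority: True models IIPHONORPRIORITY=YES, False models NO
    Returns (seconds on zIIP, seconds on general CPs, seconds left waiting).
    """
    on_ziip = min(eligible_s, free_ziip_s)
    overflow = eligible_s - on_ziip
    if honor_priority:
        # YES: general CPs help out, so nothing waits, but CP time is burned.
        return on_ziip, overflow, 0
    # NO: the overflow stays queued for a zIIP, costing elapsed time instead.
    return on_ziip, 0, overflow


print(dispatch(120, 100, honor_priority=True))
print(dispatch(120, 100, honor_priority=False))
```

With YES the 20 overflow seconds show up as CP time (cost); with NO they show up as waiting (elapsed time), which is exactly the cost-versus-time SLA question.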

Db2 12

This brought RESTful support at 60% offload, Parallel went up to 100% offload and RUNSTATS also went to 100% offload.

Db2 13

All Db2 SQL AI functions went straight to 100% offload, and the COPY utility got 100% offload, but only in the COPYR subphase.

COPY now in Db2 13 FL507

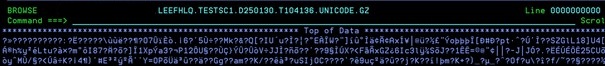

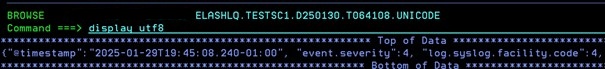

I recently kicked my little test Db2 up to Db2 13 FL507 and then waited a couple of days to see what zIIP usage COPY now gets. We were told it would only be in the COPYR subphase of the utility. I use the SYSUTILITIES table to track everything, so I wrote a little SQL that lists out all the Utilities, Counts, CPU, zIIP and Elapsed times.

Here’s the SQL, split into utility runs with and without zIIP usage:

```sql
-- Utility runs that recorded zIIP time.
-- The times are divided by 1,000,000 to turn microseconds into seconds.
SELECT SUBSTR(NAME , 1 , 18) AS UTILITY
      ,COUNT(*) AS COUNT
      ,(SUM(CPUTIME) * 1.000) / 1000000 AS CPU
      ,(SUM(ZIIPTIME) * 1.000) / 1000000 AS ZIIP
      ,((SUM(CPUTIME) + SUM(ZIIPTIME)) * 1.000) / 1000000 AS TOTAL
      ,(SUM(ELAPSEDTIME) * 1.000) / 1000000 AS ELAPSED
FROM SYSIBM.SYSUTILITIES
WHERE ZIIPTIME > 0
GROUP BY NAME
UNION ALL
-- Utility runs that recorded no zIIP time.
SELECT SUBSTR(NAME , 1 , 18) AS UTILITY
      ,COUNT(*) AS COUNT
      ,(SUM(CPUTIME) * 1.000) / 1000000 AS CPU
      ,(SUM(ZIIPTIME) * 1.000) / 1000000 AS ZIIP
      ,((SUM(CPUTIME) + SUM(ZIIPTIME)) * 1.000) / 1000000 AS TOTAL
      ,(SUM(ELAPSEDTIME) * 1.000) / 1000000 AS ELAPSED
FROM SYSIBM.SYSUTILITIES
WHERE ZIIPTIME = 0
GROUP BY NAME
ORDER BY 1 , 2
FOR FETCH ONLY
WITH UR
;
```

Here is my output:

```
---------+------------+----------+--------+----------+-----------+-
UTILITY            COUNT        CPU     ZIIP      TOTAL     ELAPSED
---------+------------+----------+--------+----------+-----------+-
CATMAINT               5       .282     .000       .282       3.477
COPY                 925     11.673    4.907     16.581     914.838
COPY               60471   1017.939     .000   1017.939   65126.853
LOAD                   2       .005     .000       .005        .012
LOAD                 802     17.453    3.852     21.306    1990.150
MODIFY RECOVERY    59128    391.163     .000    391.163   15461.098
MODIFY STATISTICS     47       .120     .000       .120       1.276
QUIESCE               10       .015     .000       .015        .156
REBUILD INDEX          3       .027     .000       .027        .797
REBUILD INDEX          9       .082     .002       .085       2.502
RECOVER                9       .047     .000       .047       1.009
REORG                  4       .022     .000       .022       1.942
REORG                 28      2.075     .427      2.503     178.284
REPORT RECOVERY        3       .059     .000       .059        .454
RUNSTATS              33       .096     .000       .096       4.695
RUNSTATS            3575     44.477   92.323    136.801    1182.851
UNLOAD              1688    129.379     .000    129.379     989.501
DSNE610I NUMBER OF ROWS DISPLAYED IS 17
```

Here, you can see which utilities are zIIP enabled and how much the zIIPs save us. The new kid on the block, COPY, already runs about 30% of its processor time on a zIIP (4.907 of 16.581 seconds for the runs that used one), which is *not* to be sneezed at!
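To put a number on that for every zIIP-enabled utility, here is a small Python sketch that works out the share of CPU + zIIP time that ran on a zIIP. The `ROWS` list is simply the zIIP rows from the output above, retyped by hand; the helper name `ziip_share` is my own.

```python
# zIIP-enabled rows from the SYSUTILITIES output above: (utility, CPU s, zIIP s).
ROWS = [
    ("COPY", 11.673, 4.907),
    ("LOAD", 17.453, 3.852),
    ("REBUILD INDEX", 0.082, 0.002),
    ("REORG", 2.075, 0.427),
    ("RUNSTATS", 44.477, 92.323),
]


def ziip_share(cpu, ziip):
    """Fraction of a utility's total (CP + zIIP) time that ran on a zIIP."""
    total = cpu + ziip
    return ziip / total if total else 0.0


for name, cpu, ziip in ROWS:
    print(f"{name:<14} {ziip_share(cpu, ziip):6.1%}")
```

For COPY this comes out at about 29.6%, and for RUNSTATS at about 67.5%.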

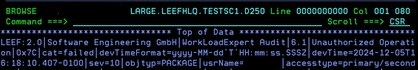

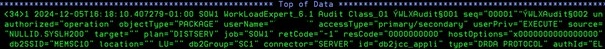

Checking in Batch

I add the “hidden” parameter STATSLVL(SUBPROCESS) to all my Utilities so that it outputs more info as I am a nerd and love more data! The numbers never all add up and so you must be a little careful, but here’s an example Image Copy JCL with output showing the counters and details:

```
//ICU005   EXEC PGM=DSNUTILB,REGION=32M,
//         PARM=(DD10,'DD1DBCO0ICU005',,STATSLVL(SUBPROCESS))
//STEPLIB  DD DISP=SHR,DSN=DSND1A.SDSNEXIT.DD10
//         DD DISP=SHR,DSN=DSND1A.SDSNLOAD
//DSSPRINT DD SYSOUT=*
//* THRESHOLD REQUEST DB2CAT REQUEST
//SYSIN    DD *
  COPY TABLESPACE DSNDB01.SYSSPUXA
       COPYDDN (SYSC1001)
       FULL YES SHRLEVEL CHANGE
//SYSC1001 DD DISP=(NEW,CATLG,DELETE),UNIT=SYSDA,
//            SPACE=(1,(352,352),RLSE),AVGREC=M,
//            DSN=COPY.DD10.DSNDB01.SYSSPUXA.P0000.D25195
//SYSPRINT DD SYSOUT=*
```

Normal output:

```
DSNU000I 195 07:45:39.89 DSNUGUTC - OUTPUT START FOR UTILITY, UTILID = DD1DBCO0ICU005
DSNU1044I 195 07:45:39.90 DSNUGTIS - PROCESSING SYSIN AS EBCDIC
DSNU050I 195 07:45:39.90 DSNUGUTC - COPY TABLESPACE DSNDB01.SYSSPUXA COPYDDN(SYSC1001) FULL YES SHRLEVEL CHANGE
DSNU3031I -DD10 195 07:45:39.91 DSNUHUTL - UTILITY HISTORY COLLECTION IS ACTIVE.
                     LEVEL: OBJECT, EVENTID: 238604
DSNU3033I -DD10 195 07:45:39.92 DSNUHOBJ - SYSIBM.SYSOBJEVENTS ROWS INSERTED FOR OBJECT-LEVEL HISTORY
DSNU400I 195 07:46:23.09 DSNUBBID - COPY PROCESSED FOR TABLESPACE DSNDB01.SYSSPUXA
                     NUMBER OF PAGES=222109
                     AVERAGE PERCENT FREE SPACE PER PAGE = 2.75
                     PERCENT OF CHANGED PAGES = 0.00
                     ELAPSED TIME=00:00:43
DSNU428I 195 07:46:23.09 DSNUBBID - DB2 IMAGE COPY SUCCESSFUL FOR TABLESPACE DSNDB01.SYSSPUXA
```

Then the extra stuff, sorry about the formatting but WordPress is not good for batch output:

```
----------------------------------------------------------------------------------------------------------
U T I L I T Y S T A T I S T I C S
INTERVAL = UTILITY HISTORY CPU (SEC) = 0.000288 ZIIP = 0.000000
LEVEL = UTILINIT SUBPROCESS ELAPSED TIME (SEC) = 0.000
BUF POOL GETPAGES SYS SETW SYNC READS SYNC WRITE SEQ PREFCH LIST PREFCH DYN PREFCH
-------- ---------- ---------- ---------- ---------- ---------- ----------- ----------
BP0 6 2
BP32K 2 1
TOTAL 8 3
----------------------------------------------------------------------------------------------------------
INTERVAL = UTILITY HISTORY CPU (SEC) = 0.000091 ZIIP = 0.000000
LEVEL = UTILINIT SUBPROCESS ELAPSED TIME (SEC) = 0.000
BUF POOL GETPAGES SYS SETW SYNC READS SYNC WRITE SEQ PREFCH LIST PREFCH DYN PREFCH
-------- ---------- ---------- ---------- ---------- ---------- ----------- ----------
BP0 3
BP32K 1 1
TOTAL 4 1
----------------------------------------------------------------------------------------------------------
INTERVAL = OBJECT-LEVEL HISTORY CPU (SEC) = 0.000147 ZIIP = 0.000000
LEVEL = UTILINIT SUBPROCESS ELAPSED TIME (SEC) = 0.001
BUF POOL GETPAGES SYS SETW SYNC READS SYNC WRITE SEQ PREFCH LIST PREFCH DYN PREFCH
-------- ---------- ---------- ---------- ---------- ---------- ----------- ----------
BP0 6 2 1
BP32K 2 1
TOTAL 8 3 1
----------------------------------------------------------------------------------------------------------
INTERVAL = UTILINIT CPU (SEC) = 0.002101 ZIIP = 0.000000
LEVEL = PHASE ELAPSED TIME (SEC) = 0.021
BUF POOL GETPAGES SYS SETW SYNC READS SYNC WRITE SEQ PREFCH LIST PREFCH DYN PREFCH
-------- ---------- ---------- ---------- ---------- ---------- ----------- ----------
BP0 48 6 2
BP32K 9 5 1
BP32K 5 3
TOTAL 62 14 2 1
DDNAME DS OPEN DS CLOSE READ I/O WRITE I/O I/O CHECKS I/O WAIT END OF VOL
-------- ---------- ---------- ---------- ---------- ---------- ----------- ----------
SYSPRINT 3 3
TOTAL 3 3
----------------------------------------------------------------------------------------------------------
```

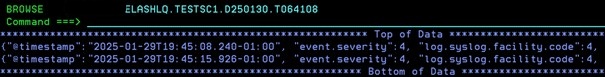

```
INTERVAL = COPYRDN0001 " CPU (SEC) = 0.008764 ZIIP = 0.273090
LEVEL = SUBPHASE ELAPSED TIME (SEC) = 43.033
BUF POOL GETPAGES SYS SETW SYNC READS SYNC WRITE SEQ PREFCH LIST PREFCH DYN PREFCH
-------- ---------- ---------- ---------- ---------- ---------- ----------- ----------
BP0 223486 3 2713 1 4982
BP32K 73 50 1
BP32K 2 2
TOTAL 223561 55 2713 1 4982 1
----------------------------------------------------------------------------------------------------------
INTERVAL = COPYWDN0001 " CPU (SEC) = 0.357434 ZIIP = 0.000000
LEVEL = SUBPHASE ELAPSED TIME (SEC) = 43.032
----------------------------------------------------------------------------------------------------------
INTERVAL = Pipe Statistics
TYPE = COPY Data Pipe000
Records in: 222,110
Records out: 222,110
Waits on full pipe: 360
Waits on empty pipe: 0
----------------------------------------------------------------------------------------------------------
INTERVAL = COPY CPU (SEC) = 0.004909 ZIIP = 0.000000
LEVEL = PHASE ELAPSED TIME (SEC) = 43.167
BUF POOL GETPAGES SYS SETW SYNC READS SYNC WRITE SEQ PREFCH LIST PREFCH DYN PREFCH
-------- ---------- ---------- ---------- ---------- ---------- ----------- ----------
BP0 22 7 1
BP32K 9 8
TOTAL 31 15 1
DDNAME DS OPEN DS CLOSE READ I/O WRITE I/O I/O CHECKS I/O WAIT END OF VOL
-------- ---------- ---------- ---------- ---------- ---------- ----------- ----------
SYSPRINT 4 4
SYSC1001 1 1 37022 37022 38.793 1
TOTAL 1 1 37026 37026 38.793 1
----------------------------------------------------------------------------------------------------------
INTERVAL = UTILTERM CPU (SEC) = 0.000150 ZIIP = 0.000000
LEVEL = PHASE ELAPSED TIME (SEC) = 0.002
BUF POOL GETPAGES SYS SETW SYNC READS SYNC WRITE SEQ PREFCH LIST PREFCH DYN PREFCH
-------- ---------- ---------- ---------- ---------- ---------- ----------- ----------
BP0 2
BP32K 2 1
TOTAL 4 1
----------------------------------------------------------------------------------------------------------
INTERVAL = COPY CPU (SEC) = 0.373401 ZIIP = 0.273090
LEVEL = UTILITY ELAPSED TIME (SEC) = 43.191
BUF POOL GETPAGES SYS SETW SYNC READS SYNC WRITE SEQ PREFCH LIST PREFCH DYN PREFCH
-------- ---------- ---------- ---------- ---------- ---------- ----------- ----------
BP0 72 13 3
BP32K 20 14 1
BP32K 5 3
TOTAL 97 30 3 1
DDNAME DS OPEN DS CLOSE READ I/O WRITE I/O I/O CHECKS I/O WAIT END OF VOL
-------- ---------- ---------- ---------- ---------- ---------- ----------- ----------
SYSPRINT 7 7
SYSC1001 1 1 37022 37022 38.793 1
TOTAL 1 1 37029 37029 38.793 1
----------------------------------------------------------------------------------------------------------
```

Here, you can easily see that, apart from the utility-level total, the only interval with any zIIP time is INTERVAL = COPYRDN0001, which is obviously the COPYR subphase IBM mentioned, so that is 100% correct!
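If you have a lot of these reports to check, eyeballing gets old quickly. Here is a small Python sketch that pulls the INTERVAL/LEVEL header lines out of the listing and reports every interval below utility level that recorded zIIP time. The regular expressions are my assumption about the layout, based only on this one listing, and the sample text is abridged to the phase, subphase and utility intervals.

```python
import re

# Interval header lines copied from the COPY output above (abridged: the
# UTILINIT SUBPROCESS history intervals are left out).
REPORT = """\
INTERVAL = UTILINIT CPU (SEC) = 0.002101 ZIIP = 0.000000
LEVEL = PHASE ELAPSED TIME (SEC) = 0.021
INTERVAL = COPYRDN0001 " CPU (SEC) = 0.008764 ZIIP = 0.273090
LEVEL = SUBPHASE ELAPSED TIME (SEC) = 43.033
INTERVAL = COPYWDN0001 " CPU (SEC) = 0.357434 ZIIP = 0.000000
LEVEL = SUBPHASE ELAPSED TIME (SEC) = 43.032
INTERVAL = COPY CPU (SEC) = 0.004909 ZIIP = 0.000000
LEVEL = PHASE ELAPSED TIME (SEC) = 43.167
INTERVAL = UTILTERM CPU (SEC) = 0.000150 ZIIP = 0.000000
LEVEL = PHASE ELAPSED TIME (SEC) = 0.002
INTERVAL = COPY CPU (SEC) = 0.373401 ZIIP = 0.273090
LEVEL = UTILITY ELAPSED TIME (SEC) = 43.191
"""

INTERVAL_RE = re.compile(
    r"INTERVAL\s*=\s*(.+?)\s+CPU \(SEC\)\s*=\s*([\d.]+)\s+ZIIP\s*=\s*([\d.]+)")
LEVEL_RE = re.compile(r"LEVEL\s*=\s*(\w+)")


def parse_intervals(text):
    """Return (name, level, cpu_seconds, ziip_seconds) for each interval."""
    rows, pending = [], None
    for line in text.splitlines():
        m = INTERVAL_RE.search(line)
        if m:
            # Drop the quote character that follows the subphase names here.
            pending = (m.group(1).strip(' "'), float(m.group(2)), float(m.group(3)))
            continue
        m = LEVEL_RE.search(line)
        if m and pending:
            name, cpu, ziip = pending
            rows.append((name, m.group(1), cpu, ziip))
            pending = None
    return rows


rows = parse_intervals(REPORT)
ziip_intervals = [r[0] for r in rows if r[1] != "UTILITY" and r[3] > 0]
name, _, cpu, ziip = next(r for r in rows if r[1] == "UTILITY")
print("zIIP intervals:", ziip_intervals)
print(f"{name}: CPU {cpu:.6f}s, zIIP {ziip:.6f}s, zIIP share {ziip / (cpu + ziip):.1%}")
```

For this run only COPYRDN0001 shows up, and its 0.273090 seconds is the whole utility-level zIIP figure, about 42.2% of the COPY's combined CP and zIIP time.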

Bells and Whistles?

Here’s a list of the z/OS parameters in the IEAOPTxx parmlib member that have to do with zIIPs:

- IIPHONORPRIORITY=xxx – Default is YES.

- PROJECTCPU=xxx – Default is NO. Whether to report possible zIIP / zAAP offload data.

- HIPERDISPATCH=xxx – Default is YES.

- MT_ZIIP_MODE=n – Default is 1. This is the multithreading flag: changing it to 2 enables zIIP multithreading (SMT), giving two hardware threads per zIIP core. That doubles the number of logical zIIPs, though not their capacity.

- CCCAWMT=nnnn – Default is 3200 microseconds (3.2 ms). This is the time z/OS waits before waking up idle CPs or zIIPs.

- ZIIPAWMT=nnnn – Default is 3200 microseconds (3.2 ms). This is the time to wait before a busy zIIP asks for help (IIPHONORPRIORITY=YES).

All of these parameters, with the possible exception of MT_ZIIP_MODE, should normally be left at their default values unless you really know what you are doing and what is running on your system! The multithreading parameter naturally only takes effect on machines whose zIIPs support SMT (z13 and later), and it also needs PROCVIEW CORE in the LOADxx member.

Anything Else?

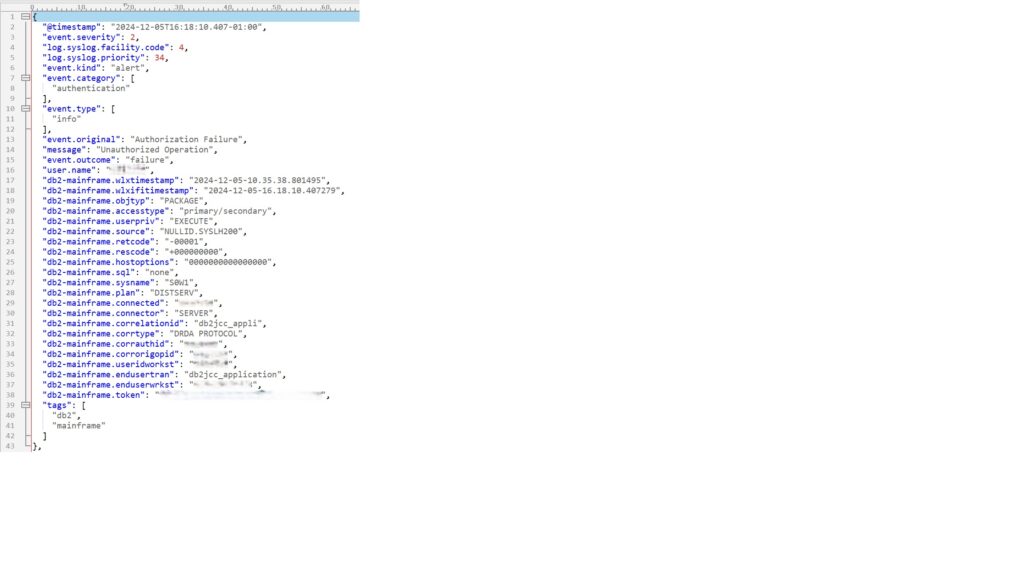

Security encryption can run on zIIP and, as everything is encrypted these days, that can be a very good idea. Other zIIP-eligible work includes XML usage in COBOL, PL/I, Java, CICS, IMS etc., and machine learning with ONNX. Python AI and ML workloads are up to 70% eligible. System Recovery Boost can use zIIPs on z15 and above, and for SVC dumps on z16 and above. The Java workload within z/OSMF can reach up to 95% offload, according to IBM internal tests. With the zIIP-to-CP ratio now gone, the sky is basically the limit!

Saving the Day?

What are zIIPs saving your firm? I would love to get a screen shot of the output of that SQL!

TTFN

Roy Boxwell