This month I wish to run through a bunch of, sadly, pretty common SQL coding mistakes that lot of beginners fall into. Not just humans either! AI is increasingly generating code snippets which developers simply cut-and-paste in the hope that it is:

- Correct SQL

- Does what they want it to do

- Runs acceptably fast!

In the Beginning was the SELECT

So, let us begin with the number 1 mistake all coders make at some time or other:

SELECT * from mytable ;

Yep, SELECT * FROM mytable. We have all done this, and in SPUFI it is the absolute norm! But if you are coding SQL that must run fast it is deadly… Why?

You are *never* alone in this world and that is doubly true of SQL running on big iron. Think buffer pools, think work space, think FTB etc. RAM is cheap these days, but if it is all being used it is no good to anyone else! The reason I mention space here, is when you code a SELECT * you are, pretty obviously, selecting every single column in the Table, View, MQT etc. This is pretty clearly going to cost you in CPU and I/O as Db2 must access every column in every row, format it, and return the value.

It gets worse, of course, as we live in a buffered world. All of this data is read and written into your precious little buffer pools and also is externalized into your darling little sort work spaces… You get the idea! Every column extra you add to the SELECT adds to the colossal amount of storage and cpu you are using. It gets even worse: If the optimizer sees this, it will sometimes, and pretty often, switch off using an index as it thinks “Well, I gotta get back every column value so an index might not really save cpu here!” Whereas a SELECT with just the three columns you really want, which also happen to be in an index, will then tell the Optimizer: Just scan this small, in comparison, index dataset! The savings can be vast.

It gets still even worse, of course… Coding a SELECT * in static or dynamic SQL will require a code change whenever you add or remove a column, as the cursor and/or program *is* aware of the columns and types of data being returned. Failure to do so is very bad news indeed!

Interesting little fact: Removing a column and then running with SELECT * will not actually cause a run time error (A negative SQLCODE), but it will cause you to possibly select garbage into fields. It is one of my pet bug bears that you can always have more columns on a FETCH than on a SELECT and Db2 does not warn or tell you!

Bad Index Usage

Using functions on columns tends to kill index access outright. If you can code around it – super! If you cannot then an IOE (Index On Expression) might be your only hope. No one likes IOEs though… The expression used must match 100% to the expression in the SQL and so for UPPER or LOWER it is not a problem but for SUBSTR(COL3 , 1 , 5) and SUBSTR(COL3 , 1 , 4) it will fail – and not tell you that the IOE was there but was not a 100% Match. EXPLAIN is naturally your friend here!

Missing Index Columns

Another absolute favorite of programmers is forgetting a join column… We have all done it, and so I am not going to throw the first stone here, but if you have two tables, both with four column indexes all with the same column names and/or functional content, then when you EXPLAIN and it joins with just one or two columns – Alarm bells should start ringing. Sometimes it must be like this but most times a JOIN / WHERE predicate has simply fallen under the table – These can be evil little problems, as sometimes the cardinality of the missing column is one so the returned data is all perfect… Nasty! Here our SQL PerformanceExpert for Db2 z/OS (SPX) software can really help out with the hunt!

Cartesian Join

If I had a Euro for every cartesian join I have seen in production I could retire! The major problem here, is sometimes you do not even see it, and sometimes the tables are so small the results are still OK. So these little problems fall under the radar until one day, that single row table you are mistakenly *not* joining to, grows up into 1,000,000 rows and then your little SQL just grinds to a halt, sucking the whole system down with it! These cartesian joins are sometimes caused by SQL changes where a programmer removes a JOIN to a table and accidentally deletes one row too many in the code. The syntax is still fine, the query might still run fine – especially in test – but then that little table grows… Boom! Here you need to use EXPLAIN, like in the index query, to see *exactly* how the Db2 Optimizer is actually joining all the tables. If you are using SYSIBM.SYSDUMMYx style tables, then you can sometimes want, and even expect, a cartesian join as you are 100% guaranteed to not ever have more than one row in that table! In all other cases, you had better make sure that there are correct JOIN … ON or WHERE criteria to avoid this pitfall.

Correlated Death

I read many years ago that “Correlated Queries are better and faster than Non-Correlated Queries unless they are not”. I always loved this advice as its right up there with “It Depends” in the list of non-helpful helpful advice! However, it has spawned an industry of correlated queries where programmers are 100% sure that writing SQL with correlated queries *all* the time is the absolute bee’s knees in performance. It isn’t! Here is a classic case:

SELECT C.CUSTOMER_ID, C.NAME

FROM CUSTOMER C

WHERE EXISTS (SELECT 1

FROM ORDERS O

WHERE O.CUSTOMER_ID = C.CUSTOMER_ID)

;

Please do not do this as the correlated query will be executed for *every* row in the CUSTOMER table, better could well be:

SELECT DISTINCT C.CUSTOMER_ID, C.NAME

FROM CUSTOMER C

, ORDERS O

WHERE O.CUSTOMER_ID = C.CUSTOMER_ID

;

Sharp eyes will have noticed the additional DISTINCT on the second query. Why? Well, we (I) do not know whether the chance of multiple rows joining on orders is wanted or not, probably not, so you must then add the DISTINCT to remove all the duplicates. This is actually one case where I would run both queries with EXPLAIN and in Production to compare side by side the results and CPU, I/O etc. and check that the join result was/is the better choice. Remember: It Depends! There are so many possible good reasons for a correlated join that it is nearly impossible to simply say “always bad or always good”. You must always test what you think might help as you might actually make things worse.

DISTINCTly a Bad Idea

I have so often seen queries like:

SELECT DISTINCT blah blah from blah

UNION

SELECT DISTINCT blah blah from blah

ORDER BY blah blah

;

Why do people do this still? Db2 is pretty clever but if you tell it to sort everything and remove duplicates and then you tell it again to do basically the same it will do it! In this case just remove the DISTINCTs and try and get the column order into the ORDER BY sequence so that the last sort is a tick faster – it will save you a ton of I/O and CPU. Remember: Only remove duplicates or sort if you really want to, but also remember: If you do not have an ORDER BY, the sequence of returned data is basically random! It might come back in beautiful sequential order just because of an index, and then tomorrow a different index could be used and POUF! Random order. Golden rule here: Use the minimum number of DISTINCTs and UNIONs and at least one ORDER BY if the order of returned rows is important. If the sort order is not important then do not add it just for the humans!

Bonus point: Some coders add DISTINCT because they get “duplicate” rows back from their query. Adding a DISTINCT fixes their problem, but all it actually does is hide it under a heap of CPU and I/O!

The Bachelor Problem – (Borrowed from Craig Mullins)

This is the failure to COMMIT. It might seem trivial at first but after you have output about 10,000 log records and you are up to over 1,000 row updates, it might be time to think about COMMITing all that work and freeing up all the rows of data and memory in the buffer pool you are blocking at the moment. Remember that COMMIT will cause you to lose Cursor position unless you have WITH HOLD defined on them and always remember to commit at Transaction boundaries – Never “half way through” a transaction for example! I also never let a subroutine issue a COMMIT – it must be the “main” program that controls COMMIT frequency. My rule of thumb here, is to COMMIT at 500 “transactions” that cause many more than 500 updates to the database. I also always use a variable that can be set at run time to raise or lower this frequency. Commits after ever update will kill you – Never Committing will also kill you – Pick a good middle path!

Too Much Data can hurt!

Following on from not COMMITing when you should, is the other cardinal sin of fetching the world to see if one row exists… I have really seen this query in *production*:

SELECT 1

FROM SYSIBM.SYSTABLES

;

This was being used as a “ping” to see if the z/OS Db2 sub-system was up and accepting work! Utterly crazy and a grand example where adding LIMIT 1 would be a great help but really, why run this query at all? These days the z/OS Db2 is *always* there and so the query works 99.999% of the time and the 0.001% where it fails, well, the world is ending anyways! Please review all queries to make sure they are only returning the rows you wish to actually process!

Einstein was Right!

He is famously quoted as saying “Insanity is doing the same thing over and over and expecting different results, like rebooting Windows PCs” – This has at least two or three areas where it hits the SQL Db2 world!

- Automation! If you do the same thing every morning every day – automate it!

- If you are doing COMMIT, ROLLBACK, ROLLBACK after ever transaction – Stop!

- If you are doing CLOSE CURSOR at CICS start of transaction – Stop!

The first point is a no-brainer. If you can write a little script, or even a program, that stops you wasting 30 minutes every day, it will add up very quickly! Remember the clever DBA gets the machine to do his/her work and not the other way around!

Number two is, sadly, a real life Hibernate problem that I discovered. The frame work, after a COMMIT, always issued two ROLLBACKs – I have mentioned before, that frameworks are all well and good but when you have *no* idea what is going on you have a problem brewing. In this particular case, the COMMIT and ROLLBACKs were not being traced, as they are not really SQL, so you had to look a bit deeper under the covers to see a crazy number of ROLLBACKs. Remember that Db2 is not the cleverest piece of silicon on the block … When it gets told ROLLBACK it dutifully saunters off and checks against the log to see if anything has been updated since the last COMMIT, and when you issue a ROLLBACK immediately after the last ROLLBACK Db2 goes off and checks again … This is a tremendous waste of CPU and I hope none of you out there “find” this little gem …

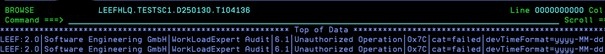

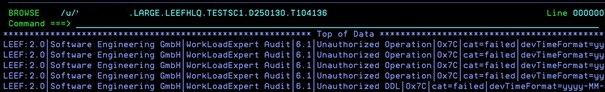

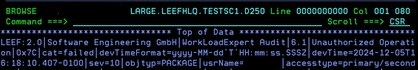

The third point was also found live in production. Way, way, way back when some bright CICS programmer had the brilliant idea to start every transaction with a CLOSE CURSOR in case the cursor had been left OPEN by the last transaction … We all know this cannot happen, right? Anyways, this genius got his/her way and *every* CICS transaction issued a CLOSE CURSOR and got an SQLCODE -501 “The cursor you are closing was not open” – surprise, surprise! Just think, if you had over 20,000,000 CICS transactions per day how much CPU just this tiny error handling path would cost you? Again, it is an SQLCODE that needs to be traced and they are not so easy to handle, but it is also doable. Here, check for how many weird or even “normal” SQLCODEs you get every day from all your running SQL – The contents can be quite scary!!!

No-one eats Stale Bread, do they?

But some people never care about Stale Statistics. If your STATSLASTTIME column in Real-Time Statistics (RTS) is over five years ago, and you have STATSINSERTS, STATSDELETES, STATSUPDATES or STATSMASSDELETES in the RTS, then you have to wonder, “Would a RUNSTATS possibly help me?”. Remember to always do a before/after check of the access paths – Here our Bind ImpactExpert for Db2 z/OS (BIX) can really help you.

Do you like Feedback?

Have you checked your SYSSTATFEEDBACK table to see what the Optimizer is crying out for? It is a gold mine of required data for your RUNSTATS, but beware, dear friends … It also holds the SQL query from Hell that the Management tried running once and got hopelessly wrong! In other words: review the contents, take a copy of the recommendations, delete them all and wait a while … If, after a couple of weeks, that row comes back into the SYSSTATFEEDBACK – BINGO! This is probably data that the Optimizer really needs and not a one-off query from hell! Here, our Real-Time DBAExpert for Db2 z/OS (RTDX) can really help you out!

Just the Facts, Ma’am

Well, I just wanted to run through a bunch of common errors and problems that I have seen around the world! I would love to hear from you if you have any other “Top Ten” style lists of evil things that have happened!

TTFN,

Roy Boxwell