This month I thought I would let an AI do all the work… here is the result from the following prompt “write me a Db2 for z/OS newsletter about an interesting topic either to do with SQL or utility usage.”

Topic: Getting the Most from Temporal Tables in DB2 for z/OS

Why Temporal Tables Matter

In many industries—especially banking, insurance, and government—regulations require that you be able to reconstruct historical data exactly as it was at a given point in time. Prior to DB2 10 for z/OS, this was often done with custom history tables, triggers, and a lot of application code.

Today, temporal tables make this far simpler, with built-in DB2 features to maintain and query historical versions of data.

System-Period vs. Application-Period Temporal Tables

DB2 supports two main types of temporal tables:

- System-period temporal tables – DB2 automatically maintains historical versions of rows when changes occur. Two timestamp columns (ROW BEGIN and ROW END) and a TRANSACTION START ID are used to track validity.

- Application-period temporal tables – The application controls the validity period by providing its own start and end timestamps. Useful when the “effective” date of data is different from the transaction date.

You can also combine them into bi-temporal tables for the ultimate in time-based data tracking.

Basic Example: System-Period Temporal Table

CREATE TABLE CUSTOMER (

CUST_ID INTEGER NOT NULL,

NAME VARCHAR(100),

STATUS CHAR(1),

ROW_BEGIN TIMESTAMP(12) GENERATED ALWAYS AS ROW BEGIN NOT NULL,

ROW_END TIMESTAMP(12) GENERATED ALWAYS AS ROW END NOT NULL,

TRAN_START_ID TIMESTAMP(12) GENERATED ALWAYS AS TRANSACTION START ID

NOT NULL,

PERIOD SYSTEM_TIME (ROW_BEGIN, ROW_END)

) WITH SYSTEM VERSIONING;Once the table is system-versioned, DB2 automatically creates and manages a history table. You can query historical data using the FOR SYSTEM_TIME clause:

SELECT *

FROM CUSTOMER

FOR SYSTEM_TIME AS OF TIMESTAMP('2024-07-01-10.00.00')

WHERE CUST_ID = 1001;Performance Considerations

- Indexing Matters: DB2 will maintain history indexes for you, but ensure they support your most common time-based predicates to avoid costly table scans.

- Partitioning History Tables: If your history tables grow large, consider partitioning by date to keep queries efficient.

- Utility Implications: REORG, COPY, and RUNSTATS operate on both the base and history tables. REORG with SHRLEVEL CHANGE can keep them online, but be mindful of increased elapsed time when history data is large.

Utility Tip: REORG and Temporal Tables

When running REORG on a temporal table, DB2 handles both the base and history table in a single execution if they are bound together. However, if you need to reorganize only the history table (for example, to improve access to older data without touching current production data), you can explicitly REORG just the history table.

Example:

REORG TABLESPACE HISTTS <-- History table's tablespace

SHRLEVEL CHANGE

SORTDATA

STATISTICS INDEX(ALL);This can help reduce impact on current transactions while still maintaining query performance for historical reporting.

Best Practices

- Use temporal queries rather than joining base and history tables manually; it ensures optimizer support and cleaner code.

- Regularly RUNSTATS on both base and history tables to keep the optimizer informed about growth patterns.

- For reporting, consider materialized query tables (MQTs) on top of temporal queries for faster access to common time-based reports.

Final Thought:

Temporal tables take much of the heavy lifting out of managing historical data in DB2 for z/OS. When paired with smart indexing, partitioning, and utility usage, they can deliver both compliance and performance—without the spaghetti code of the old days.

Maintaining Large Temporal Table Environments in DB2 for z/OS

(Utility Scheduling & Best Practices)

When you start using temporal tables—especially system-period or bi-temporal—your history tables grow quickly. This can cause issues if utilities aren’t tuned for their unique workload patterns.

Here’s a real-world maintenance workflow you can adapt.

1. Understand the Data Growth Pattern

- Base table – Moderate growth, mainly current records.

- History table – Potentially huge growth, as every UPDATE or DELETE adds rows.

- Data skew – History tables are often append-heavy with few deletes.

Before setting schedules, run:

SELECT COUNT(*), MIN(ROW_BEGIN), MAX(ROW_END)

FROM HISTORY_TABLE;This gives you volume and time-span, helping you plan partition-level REORGs.

2. Recommended Utility Workflow

Nightly (or per batch cycle):

- RUNSTATS on base table only (small, keeps optimizer fresh for OLTP).

RUNSTATS TABLESPACE BASETS TABLE(ALL) INDEX(ALL) UPDATE ALL;Weekly:

- REORG history table partitions with recent activity.

- Use PART to target only active partitions.

- SHRLEVEL CHANGE to keep reporting jobs running.

REORG TABLESPACE HISTTS PART 15:17

SHRLEVEL CHANGE

SORTDATA

STATISTICS INDEX(ALL);Monthly:

- COPY both base and history tables for backup compliance.

COPY TABLESPACE BASETS FULL YES

COPY TABLESPACE HISTTS FULL YESQuarterly:

- REORG full history table if query performance declines or RUNSTATS indicate high clustering ratio loss.

- RUNSTATS on full history table.

- Consider MERGECOPY to consolidate image copies.

3. Tips to Keep Utilities Efficient

- Use LISTDEF to dynamically select only affected partitions:

LISTDEF HISTPARTS INCLUDE TABLESPACE HISTTS

PARTLEVEL

PARTCOND (LASTUSED > CURRENT DATE - 7 DAYS);- Parallelism – Large history REORG jobs benefit from PARALLEL keyword if CPU and I/O capacity allow.

- Inline Stats – For history tables, you can take inline stats during REORG to avoid a separate RUNSTATS step.

- Automation – Many shops integrate this with DSNACCOX to decide when REORG or RUNSTATS are needed.

4. Example End-to-End Utility JCL Snippet

//STEP1 EXEC DSNUPROC,SYSTEM=DSN1,UID='HIST.REORG'

//SYSIN DD *

LISTDEF HIST_PARTS INCLUDE TABLESPACE HISTTS

PARTLEVEL

PARTCOND (LASTUSED > CURRENT DATE - 7 DAYS)

REORG LISTDEF HIST_PARTS

SHRLEVEL CHANGE

SORTDATA

STATISTICS INDEX(ALL) UPDATE ALL

/*

//STEP2 EXEC DSNUPROC,SYSTEM=DSN1,UID='HIST.COPY'

//SYSIN DD *

COPY TABLESPACE HISTTS FULL YES

/*

//STEP3 EXEC DSNUPROC,SYSTEM=DSN1,UID='BASE.RUNSTATS'

//SYSIN DD *

RUNSTATS TABLESPACE BASETS TABLE(ALL) INDEX(ALL) UPDATE ALL

/*5. Key Takeaways

- Don’t treat history tables like “just another table”—their size and access patterns demand dedicated utility schedules.

- Use partition-level maintenance wherever possible to cut elapsed time.

- Integrate RUNSTATS and REORG with automation tools to keep the optimizer happy without manual intervention.

Whaddya Think?

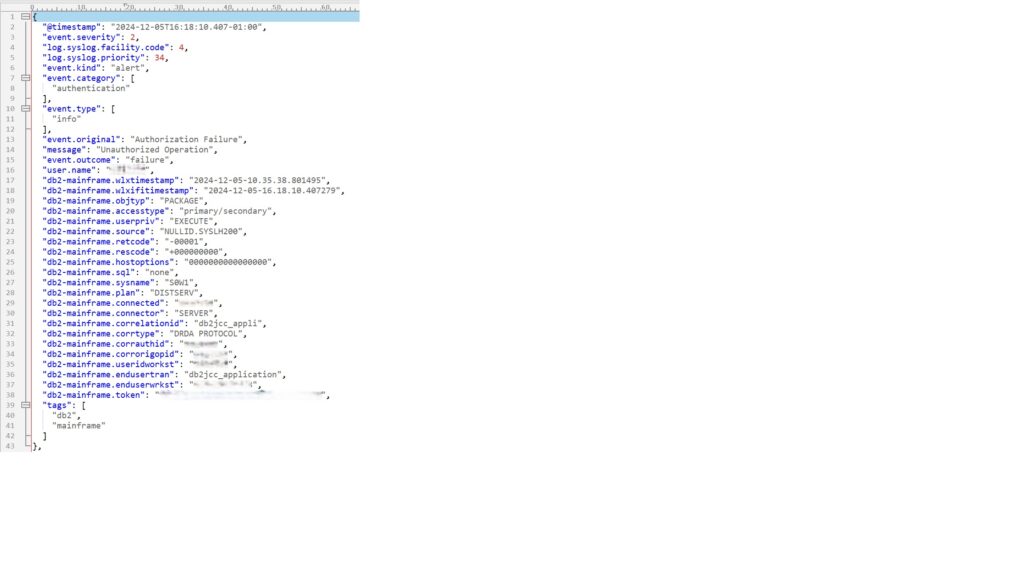

Well, I do not think I will unemployed anytime soon based on this, although, at first glance, it is not that bad. Then you look at the details and it starts to all go horribly wrong…

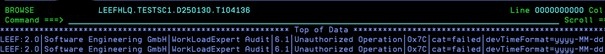

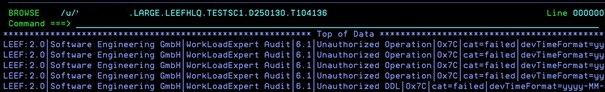

- Table creation does not know of the keyword SYSTEM VERSIONING, this hints to the fact that the HIST table is not actually automatically created either!

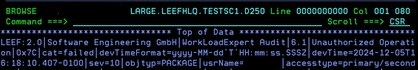

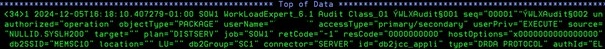

The example SELECT SQL naturally dies with a:

DSNT408I SQLCODE = -20524, ERROR: INVALID PERIOD SPECIFICATION OR PERIOD

CLAUSE FOR PERIOD SYSTEM_TIME. REASON CODE = 02until you manually create the history table and issue the required ALTER:

CREATE TABLE HIST_CUSTOMER (

CUST_ID INTEGER NOT NULL,

NAME VARCHAR(100),

STATUS CHAR(1),

ROW_BEGIN TIMESTAMP(12) NOT NULL,

ROW_END TIMESTAMP(12) NOT NULL,

TRAN_START_ID TIMESTAMP(12) NOT NULL

)

;

COMMIT

;

ALTER TABLE CUSTOMER

ADD VERSIONING USE HISTORY TABLE HIST_CUSTOMER

;- LISTDEF does not support conditional partitions (PARTCOND) although the idea is pretty cool.

- Utility statements do not end in a semi-colon.

- Finally, and the very worst mistake of all: seven uses of the capital B! The audacity!!!

What About You?

Have any of you had “interesting” AI suggestions in the world of Db2 for z/OS? I would love to hear from you!

TTFN,

Roy Boxwell