Or: How I Learned to Stop Worrying and Love the Auditor

A guideline in 5 big Steps to Audit for Personal Data Protection

First up, this is not another audit review and health check sales pitch! We, and I mean especially those of us in the European Union (EU), are about to get the biggest update to “my data”, “my right to know” and “my right to forget (delete)” that has ever happened, when the General Data Protection Regulation (GDPR) kicks off on May 25th, 2018.

Who cares?

Well, we all should really! Very soon hordes of people could well start demanding to know whether you hold any data about them and, if so, where it is and who you give it to. Personal Data will be big business!

Age of Consent

Just assuming all is OK is also no longer valid or lawful – any personal data that you keep must be kept with the *active* consent of the data owner… This is going to get awfully messy awfully quickly! Do not forget that the definition of personal data has also changed quite a bit, e.g. IP addresses now count. For the record, here is the actual text in the GDPR:

Art. 4 GDPR Definitions

For the purposes of this Regulation:

1. ‘personal data’ means any information relating to an identified or identifiable natural person (‘data subject’); an identifiable natural person is one who can be identified, directly or indirectly, in particular by reference to an identifier such as a name, an identification number, location data, an online identifier or to one or more factors specific to the physical, physiological, genetic, mental, economic, cultural or social identity of that natural person;

And

Art. 9 GDPR Processing of special categories of personal data

1. Processing of personal data revealing racial or ethnic origin, political opinions, religious or philosophical beliefs, or trade union membership, and the processing of genetic data, biometric data for the purpose of uniquely identifying a natural person, data concerning health or data concerning a natural person’s sex life or sexual orientation shall be prohibited.

Security by design and Security of processing

Very important are Articles 25 and 32:

Art. 25 GDPR Data protection by design and by default

1. Taking into account the state of the art, the cost of implementation and the nature, scope, context and purposes of processing as well as the risks of varying likelihood and severity for rights and freedoms of natural persons posed by the processing, the controller shall, both at the time of the determination of the means for processing and at the time of the processing itself, implement appropriate technical and organisational measures, such as pseudonymisation, which are designed to implement data-protection principles, such as data minimisation, in an effective manner and to integrate the necessary safeguards into the processing in order to meet the requirements of this Regulation and protect the rights of data subjects.

2. The controller shall implement appropriate technical and organisational measures for ensuring that, by default, only personal data which are necessary for each specific purpose of the processing are processed. That obligation applies to the amount of personal data collected, the extent of their processing, the period of their storage and their accessibility. In particular, such measures shall ensure that by default personal data are not made accessible without the individual’s intervention to an indefinite number of natural persons.

The last part of paragraph 2 is important here.

Art. 32 GDPR Security of processing

1. Taking into account the state of the art, the costs of implementation and the nature, scope, context and purposes of processing as well as the risk of varying likelihood and severity for the rights and freedoms of natural persons, the controller and the processor shall implement appropriate technical and organisational measures to ensure a level of security appropriate to the risk, including inter alia as appropriate:

1. the pseudonymisation and encryption of personal data;

2. the ability to ensure the ongoing confidentiality, integrity, availability and resilience of processing systems and services;

3. the ability to restore the availability and access to personal data in a timely manner in the event of a physical or technical incident;

4. a process for regularly testing, assessing and evaluating the effectiveness of technical and organisational measures for ensuring the security of the processing.

2. In assessing the appropriate level of security account shall be taken in particular of the risks that are presented by processing, in particular from accidental or unlawful destruction, loss, alteration, unauthorised disclosure of, or access to personal data transmitted, stored or otherwise processed.

Here paragraphs 1.2, 1.4 and 2 are the biggies!

A small or large fine for you today?

The fines are also amazingly high. First, the “minor” problem of reporting a data leak (a breach) more than 72 hours late can cost you up to 2% of global turnover or €10,000,000, whichever is *higher*. If you are really naughty, like disregarding basic data laws, moving data abroad or ignoring an individual’s rights, then you can get hit for up to 4% of global turnover or €20,000,000, again whichever is *higher*.
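To make the "whichever is higher" rule concrete, here is a minimal sketch of the arithmetic. The function name and the tier labels `lower` and `upper` are only illustrative, and the result is the upper limit of the fine, not what an authority would actually impose.

```python
def max_fine_eur(global_turnover_eur: int, tier: str) -> int:
    """Return the upper limit of the fine in whole euros.

    tier "lower": 2% of global turnover or EUR 10,000,000, whichever is higher.
    tier "upper": 4% of global turnover or EUR 20,000,000, whichever is higher.
    """
    percent, fixed_amount = {
        "lower": (2, 10_000_000),
        "upper": (4, 20_000_000),
    }[tier]
    # Integer arithmetic keeps the euro amounts exact.
    return max(global_turnover_eur * percent // 100, fixed_amount)


if __name__ == "__main__":
    # A firm with EUR 2 billion turnover: 4% is far above the fixed amount.
    print(max_fine_eur(2_000_000_000, "upper"))
    # A firm with EUR 100 million turnover: the fixed amount wins.
    print(max_fine_eur(100_000_000, "lower"))
```

For smaller firms the fixed amount is the one that bites, which is why the percentage alone understates the exposure.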

First up?

I, for one, do not want to be the first company that makes the headlines… How about you?

Who are you afraid of?

Now I know that most of the talk about GDPR is “right to data” for the general public but we all know, as IT specialists, that the people to be really afraid of are ex-employees who did not leave on happy terms. They know *exactly* where the knife could best be put… They know exactly where, and on which platforms, all of the data really is.

Protect yourself – Due diligence

What can you do? Well, the first thing is to actually understand what GDPR means to you and your firm’s data. Then you must be able to prove to auditors that you tried your very best. We will all probably get hacked at some time, but if you can show that you tried your best, that will count in your favour. Using all of the data you gather in a post-mortem, or for forensics, is also a very good idea, as can be seen in paragraphs 2.3 and 2.4 below:

Art. 83 GDPR General conditions for imposing administrative fines

1. Each supervisory authority shall ensure that the imposition of administrative fines pursuant to this Article in respect of infringements of this Regulation referred to in paragraphs 4, 5 and 6 shall in each individual case be effective, proportionate and dissuasive.

2. Administrative fines shall, depending on the circumstances of each individual case, be imposed in addition to, or instead of, measures referred to in points (a) to (h) and (j) of Article 58(2). When deciding whether to impose an administrative fine and deciding on the amount of the administrative fine in each individual case due regard shall be given to the following:

1. the nature, gravity and duration of the infringement taking into account the nature scope or purpose of the processing concerned as well as the number of data subjects affected and the level of damage suffered by them;

2. the intentional or negligent character of the infringement;

3. any action taken by the controller or processor to mitigate the damage suffered by data subjects;

4. the degree of responsibility of the controller or processor taking into account technical and organisational measures implemented by them pursuant to Articles 25 and 32;

The list goes on after these of course…

Db2 Audit – a new way?

Most sites use SMF and do daily cuts, which are then offloaded to a repository system of some sort. The problem here is that the amount of SMF data you are generating can swamp you, and this method is not nearly quick enough. Waiting a day uses up 24 of the 72 hours that are available to you…

Faster, Better, Securer?

Can you do it faster? Can you do it yourself faster? Can you do it better? Maybe…

If you can handle OPx and a bit of High Level Assembler then “Bob’s your uncle!”, as long as Bob is your Auditor of course… There are more than enough examples on the web about how to write and do OPx reading. (There are even vendors that are willing to sell you their software 🙂)

A guideline in 5 big steps to Audit in a new way for Personal Data protection:

1 – To do list – IFI commands, IFCIDS & Audit Class

Create a program that can issue the required IFI commands to start and stop traces and to issue the READA and READS IFI calls that you wish to have. If you don’t know which IFCIDs belong to which Audit Class, here’s my handy list:

| Audit Class | IFCIDs | What | Why |

| 1 | 140 | Authorization failures | Possible brute force password attack |

| 2 | 141 | GRANTs and REVOKEs | Possible temporary raising of privilege |

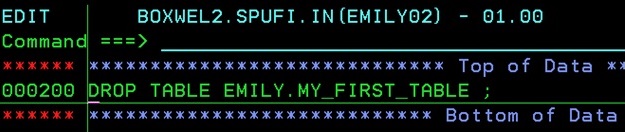

| 3 | 142 | CREATE/ALTER/DROP of table with AUDIT attribute | Removing the AUDIT attribute is a big red flag |

| 4 | 143 | 1st Change of table with AUDIT attribute | Sensitive data updates |

| 5 | 144 | 1st Select of table with AUDIT attribute | Sensitive data usage |

| 6 | 145 | BIND/PREPARE using table with AUDIT attribute | Possible usage of sensitive data |

| 7 | 55, 83, 87, 169, 319 | SET CURRENT SQLID, end of identify, end of signon CICS/IMS | Elevation of privilege |

| 8 | 23, 24, 25, 219, 220 | Db2 Utilities | UNLOADing data to where? |

| 9 | 146, 392 | For customer use | n/a |

| 10 | 269, 270, 271*

271 is not started automatically with Class 10 | Establish reuse trusted context, trusted context CREATE/ALTER and Column Mask/Row Permission CREATE/DROP/ALTER | Anything with MASKs or ROW Permissions must be checked |

| 11 | 361 | Successful access | Only makes sense if working with AUDIT POLICY SYSADMIN or DBADMIN |

So, what is missing from this picture?

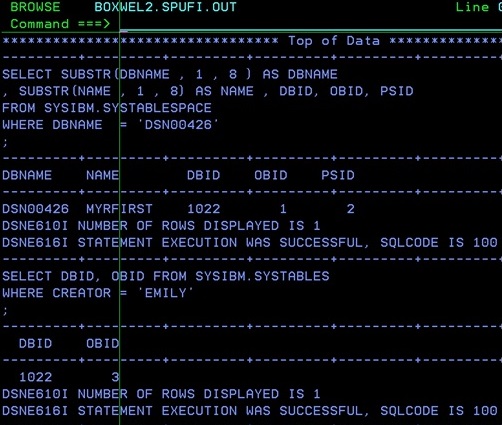

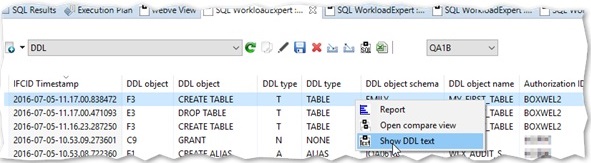

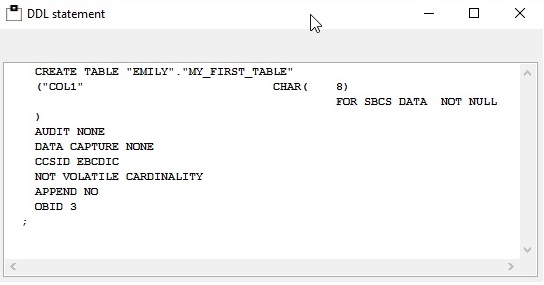

Well, what about the COMMANDs, to actually see what people are issuing on the machine? For these you must also add IFCIDs 90 and 91. Even then you do not see any of the DDL, so you must also start IFCID 62 to get that. You do want to see what people are ALTERing, CREATEing and DROPping, don’t you?

2 – All done in design?

Once you have a system that gathers all these you must buffer them in memory and then do one of two things:

1) Write them out to a file for post-processing and enrichment later

2) Directly write them to your SIEM system of choice

I am not a fan of number 2 because if the SIEM system cannot accept the data then your data is lost…

With No. 1 you can keep processing even if the SIEM system cannot ingest, for whatever reason, and as long as you trigger yourself every 5 – 10 minutes or when the OPx buffer is full then that is “real time” enough for me!
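Option 1 can be sketched as a small spool: records are buffered in memory and written to a file once the buffer is full or the interval has passed, so nothing depends on the SIEM being up. This is Python for illustration only, not the assembler collector itself. The class name `AuditSpool`, the JSON-lines file layout and the default limits are all assumptions.

```python
import json
import os
import time


class AuditSpool:
    """Buffer audit records in memory and spool them to files for later pickup."""

    def __init__(self, spool_dir, max_records=1000, max_age_seconds=300.0,
                 clock=time.monotonic):
        self.spool_dir = spool_dir
        self.max_records = max_records
        self.max_age_seconds = max_age_seconds
        self._clock = clock
        self._buffer = []
        self._last_flush = clock()
        self._sequence = 0
        os.makedirs(spool_dir, exist_ok=True)

    def add(self, record):
        """Buffer one record; returns the spool file path if a flush happened."""
        self._buffer.append(record)
        buffer_full = len(self._buffer) >= self.max_records
        too_old = self._clock() - self._last_flush >= self.max_age_seconds
        if buffer_full or too_old:
            return self.flush()
        return None

    def flush(self):
        """Write all buffered records to a new file; returns its path or None."""
        self._last_flush = self._clock()
        if not self._buffer:
            return None
        self._sequence += 1
        final_path = os.path.join(self.spool_dir, f"audit-{self._sequence:08d}.jsonl")
        temp_path = final_path + ".tmp"
        with open(temp_path, "w", encoding="utf-8") as out:
            for record in self._buffer:
                out.write(json.dumps(record, sort_keys=True) + "\n")
        # Rename only when complete, so a reader never sees a half-written file.
        os.replace(temp_path, final_path)
        self._buffer.clear()
        return final_path
```

In this sketch the age check only runs when a record arrives, so a real collector would also call `flush()` from a timer. If the SIEM is down, the files simply pile up in the spool directory until it can ingest again.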

3 – Care for something else for the weekend?

Plus you can enrich the data with extra stuff that the naked IFCIDs do not have attached to them, e.g. map dbid/psid to a real database and tablespace.

Store all of this data in a bunch of Db2 tables, then kick off a Db2-to-LEEF re-formatter program that reads all the audit data that has been collected since last time and writes it all out.
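The enrichment and the LEEF re-formatting might look roughly like this. It is a sketch under stated assumptions: the event field names, the `OBJECT_NAMES` lookup (which in practice would be filled from the Db2 catalog) and the vendor and product strings are all hypothetical. The line layout follows the LEEF 1.0 convention of a pipe-delimited header followed by tab-separated key=value pairs.

```python
# Hypothetical (dbid, psid) -> (database, tablespace) lookup.
OBJECT_NAMES = {(260, 2): ("DSNDB04", "CUSTOMER")}


def enrich(event, object_names):
    """Return a copy of the event with database and tablespace names added."""
    enriched = dict(event)
    key = (event.get("dbid"), event.get("psid"))
    database, tablespace = object_names.get(key, ("UNKNOWN", "UNKNOWN"))
    enriched["database"] = database
    enriched["tablespace"] = tablespace
    return enriched


def _clean(value):
    """Tabs and newlines would break the tab-separated attribute list."""
    return str(value).replace("\t", " ").replace("\n", " ")


def to_leef(event, vendor="ExampleVendor", product="Db2Audit", version="1.0"):
    """Format one event as a LEEF 1.0 line, using the IFCID as the event id."""
    header = f"LEEF:1.0|{vendor}|{product}|{version}|IFCID{event['ifcid']}|"
    attributes = "\t".join(
        f"{key}={_clean(value)}"
        for key, value in sorted(event.items())
        if key != "ifcid"
    )
    return header + attributes


if __name__ == "__main__":
    raw = {"ifcid": 143, "dbid": 260, "psid": 2, "authid": "USER01"}
    print(to_leef(enrich(raw, OBJECT_NAMES)))
```

Unknown dbid/psid pairs are kept and marked `UNKNOWN` rather than dropped, since an audit record with a missing name is still better than no audit record.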

4 – Code Page – don’t forget the Code Page

Then you must do an ICONV for code page conversion, swiftly followed by a gzip to get it down to size and then copy it down to a USS file ready for your SIEM system to ingest its prey.
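The same two steps, code page conversion and compression, can be shown in a few lines of Python. This is a stand-in for the iconv and gzip steps, not the job itself, and it assumes the source data is in EBCDIC code page 037 and that the SIEM wants UTF-8. Your code pages may well differ.

```python
import gzip


def convert_and_compress(ebcdic_bytes, source_codec="cp037"):
    """Decode EBCDIC bytes, re-encode them as UTF-8 and gzip the result."""
    text = ebcdic_bytes.decode(source_codec)
    return gzip.compress(text.encode("utf-8"))


if __name__ == "__main__":
    original = "GRANT SELECT ON SYSIBM.SYSDUMMY1 TO PUBLIC"
    ebcdic = original.encode("cp037")  # simulate data as it sits on the host
    packed = convert_and_compress(ebcdic)
    # Round trip: unpack and check that the text survived the conversion.
    print(gzip.decompress(packed).decode("utf-8") == original)
```

Converting before compressing matters: once the data is gzipped, a code page conversion on the other side is no longer possible without unpacking it first.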

5 – Done, Finished and Complete

To prove that this system is always available you should also schedule a simple

GRANT SELECT ON SYSIBM.SYSDUMMY1 TO PUBLIC;

every morning at 06:00, which you should then see in the output every day. Remember – Due Diligence!
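Checking for that daily heartbeat is easy to automate. The sketch below assumes each collected event is a dictionary with `timestamp`, `ifcid` and `sql_text` fields. Those field names are illustrative, while IFCID 141 is the GRANT/REVOKE record from Audit Class 2.

```python
from datetime import date, datetime

HEARTBEAT_SQL = "GRANT SELECT ON SYSIBM.SYSDUMMY1 TO PUBLIC"


def heartbeat_seen(events, day):
    """True if the scheduled GRANT shows up as an IFCID 141 event on that day."""
    for event in events:
        # Normalise whitespace, case and the trailing semicolon before comparing.
        sql = " ".join(event.get("sql_text", "").upper().split())
        sql = sql.rstrip(";").strip()
        if (
            event.get("ifcid") == 141
            and event["timestamp"].date() == day
            and sql == HEARTBEAT_SQL
        ):
            return True
    return False


if __name__ == "__main__":
    collected = [
        {
            "ifcid": 141,
            "timestamp": datetime(2018, 5, 25, 6, 0, 3),
            "sql_text": "GRANT SELECT ON SYSIBM.SYSDUMMY1 TO PUBLIC;",
        }
    ]
    print(heartbeat_seen(collected, date(2018, 5, 25)))
    print(heartbeat_seen(collected, date(2018, 5, 26)))
```

A day without the heartbeat means the collection chain was broken somewhere, which is exactly what you want to find out before the auditor does.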

So now you are done and finished and can let the SIEM system do the completion!

As always, any questions or comments would be most welcome!

TTFN,

Roy Boxwell

PS: Please note that the bolding of parts of the GDPR text is only from me!