This month I have a really nice – and rather long – technical newsletter to sink your teeth into!

The problem: Too low internal PIECE limit in DB2

The solution: Set of SQL queries for my newsletter readers to really find the bad guys

– Create DBD diagnose cards

– Cut and paste the control cards into some DB2 Utility JCL

– Do “F OBDINNUM” in the REPAIR job output

– Automatic parse the millions of lines of REPAIR output and *only* outputs OBIDs

Whole SQL.txt JCL.txt REXX.txt

Reach your real maximum number of PIECEs!

The Problem: Too low internal PIECE limit in DB2

We start with a little APAR that IBM did way back in 2013:

2013 IBM APAR

PM75150: ALLOW MAXIMUM NUMBER OF PIECES FOR AN NPI OF LARGE TABLE SPACE, INDEPENDENT OF TABLE SPACE MAXIMUM NUMBER OF DATASETS

| Reported release | A10 |

| Status | CLOSED UR1 |

| PE | NoPE |

| HIPER | NoHIPER |

| Special Attention | NoSpecatt |

| Submitted date | 2012-10-16 |

| Closed date | 2013-02-25 |

| Last modified date | 2013-04-02 |

So far, so good. Looks like any run of the mill standard DB2 10 fix of “no real importance”… until you read the “small print” that is!

******************************************************************************************************************

USERS AFFECTED: All DB2 users of Non-Partitioned Index (NPI) on a large table space.

******************************************************************************************************************

PROBLEM DESCRIPTION:

For a large table space, the maximum number of pieces for an NPI is incorrectly inherited from the

maximum number of partitions of the table space. When inserting data to a table or creating the NPI,

the customer may receive the following message,

DSNT501I DMBC DSNKINSL RESOURCE UNAVAILABLE CORRELATION-ID=QFSBTC036

CONNECTION-ID=SERVER LUW-ID= GAF263F9.C741.120818101839=561408

REASON 00C9009D

TYPE 00000201

****************************************************************************************************************

RECOMMENDATION:

****************************************************************************************************************

For an NPI on a LARGE(5-byte RID) table space, the index maximum number of pieces is independent of the table space maximum number of partitions, it is calculated from index page size and piece size as the following:

Maximum NPI number of pieces = MINIMUM( 4096, 2^32 / (index piece size/index page size) )

In other words, DB2 set the internal piece limit way too low!

This is very bad news if you think you have 4,096 pieces but you really have only 256!

Now the APAR carries on with helpful hints and tips (Quoting here from the APAR text):

Top of page

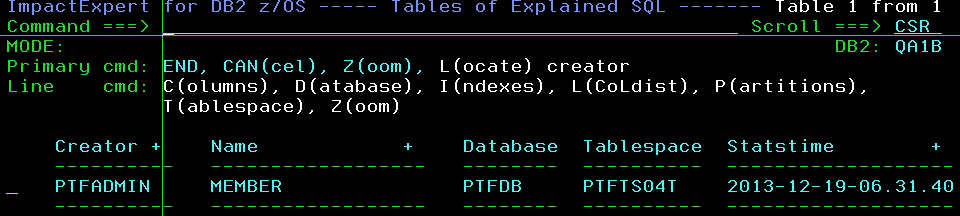

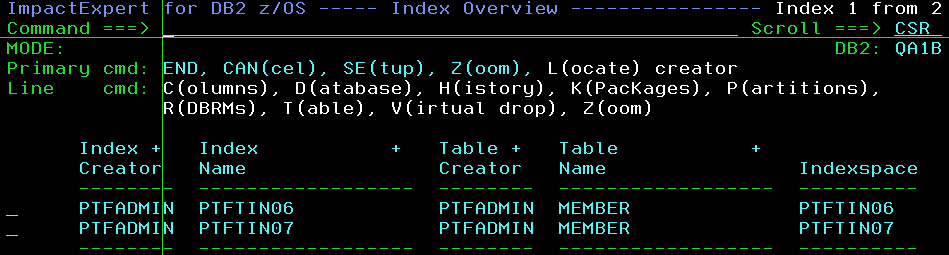

1. run a query, such as the sample query below, to identify the databases, table spaces and indexes,

SELECT TS.DBNAME AS DB_NAME,

TS.NAME AS TS_NAME,

IX.NAME AS INDEX_NAME,

IX.CREATOR AS IX_CREATOR,

HEX(IX.OBID) AS INDEX_OBID,

IX.CLOSERULE AS CLOSERULE

FROM SYSIBM.SYSINDEXES IX,

SYSIBM.SYSTABLES TB,

SYSIBM.SYSTABLESPACE TS

WHERE TB.NAME = IX.TBNAME AND

TB.CREATOR = IX.TBCREATOR AND

TB.TSNAME = TS.NAME AND

TB.DBNAME = TS.DBNAME AND

(TS.PARTITIONS > 254 OR

TS.TYPE = 'L' OR

TS.TYPE = 'K' OR

TS.DSSIZE > 0) AND

TS.PARTITIONS <> 0 AND

(TS.STATUS = 'C' OR

NOT ((IX.INDEXTYPE = 'P' OR

IX.INDEXTYPE = 'D') AND

TB.PARTKEYCOLNUM <>0)) AND

TS.DBNAME <> 'DSNDB06'

ORDER BY IX.OBID;+-------------------------------------------------------------+

|DB_NAME |TS_NAME |INDEX_NAME|IX_CREATOR|INDEX_OBID|CLOSERULE |

+-------------------------------------------------------------+

|DB161410|XTB10000|I_NODEIDXT|SC161410 |0056 |N |

|DB161410|TU161410|I_DOCIDTB1|SC161410 |0058 |N |

...

2. run REPAIR DBD DIAGNOSE against all databases from the above query.

For example REPAIR DBD DB161410. By searching OBDINNUM in the REPAIR DBD output, it has the following mismatch,

DSNU916I DSNUEDFT - OBDS DIFFER AT OFFSET X'00000052'

OBDINNUM - INFORMATION NOT IN DB2 CATALOG

DSNU904I DSNUEDFT - EXISTING VALUE X'0100'

DSNU905I DSNUEDFT - REBUILT VALUE X'1000'

DSNU913I DSNUEDDR - OBD X'0056' COMPARISON COMPLETE -- 0 ERRORS

which confirms the index index I_NODEIDXT with OBID X'0056' has incorrect maximum number of index pieces.

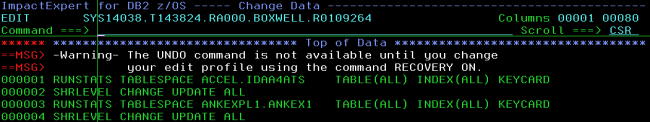

3. fix these indexes found in the above repair DBD output by altering the CLOSE attribute of the identified indexes from their current value and then altering the CLOSE attribute back to their original value. The index name and index attribute information can be found in the query result at step 1.

for example, the index SC161410.I_NODEIDXT has the CLOSE attribute CLOSERULE = N. To correct the maximum number of index pieces for I_NODEIDXT, run the following DDL

ALTER INDEX index SC161410.I_NODEIDXT CLOSE YES

ALTER INDEX index SC161410.I_NODEIDXT CLOSE NO

So what I decided to do, was to create a set of queries for my newsletter readers to make this process a tad easier to really find the bad guys!

Top of page

The solution: Set of SQL queries for my newsletter readers to really find the bad guys

Whole SQL.txt JCL.txt REXX.txt

Create DBD diagnose cards

First step is nearly the same SQL as in 1. Above:

-- CREATE DBD DIAGNOSE CARDS FOR ANY DATABASES THAT ARE CANDIDATES

SELECT DISTINCT SUBSTR(' REPAIR OBJECT LOG YES DBD DIAGNOSE DATABASE '

CONCAT STRIP(TS.DBNAME) , 1 , 54 )

FROM SYSIBM.SYSINDEXES IX,

SYSIBM.SYSTABLES TB,

SYSIBM.SYSTABLESPACE TS

WHERE TB.NAME = IX.TBNAME

AND TB.CREATOR = IX.TBCREATOR

AND TB.TSNAME = TS.NAME

AND TB.DBNAME = TS.DBNAME

AND (TS.PARTITIONS > 254

OR TS.TYPE = 'L'

OR TS.TYPE = 'K'

OR TS.DSSIZE > 0)

AND TS.PARTITIONS <> 0

AND (TS.STATUS = 'C'

OR NOT ((IX.INDEXTYPE = 'P'

OR IX.INDEXTYPE = 'D')

AND TB.PARTKEYCOLNUM <> 0))

AND TS.DBNAME <> 'DSNDB06'

;It returns this style of output (If you have *no* output then congratulations you have *no* problem!):

REPAIR OBJECT LOG YES DBD DIAGNOSE DATABASE A140XO82

REPAIR OBJECT LOG YES DBD DIAGNOSE DATABASE ANKDB1

REPAIR OBJECT LOG YES DBD DIAGNOSE DATABASE ANKDB3

.

.

.

Top of page

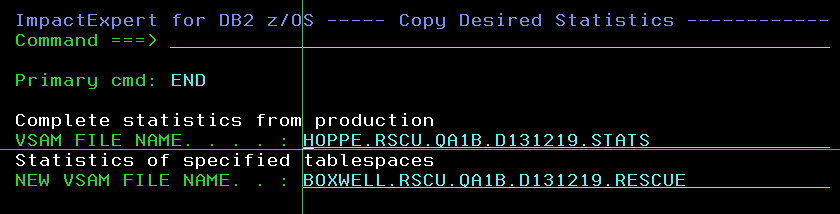

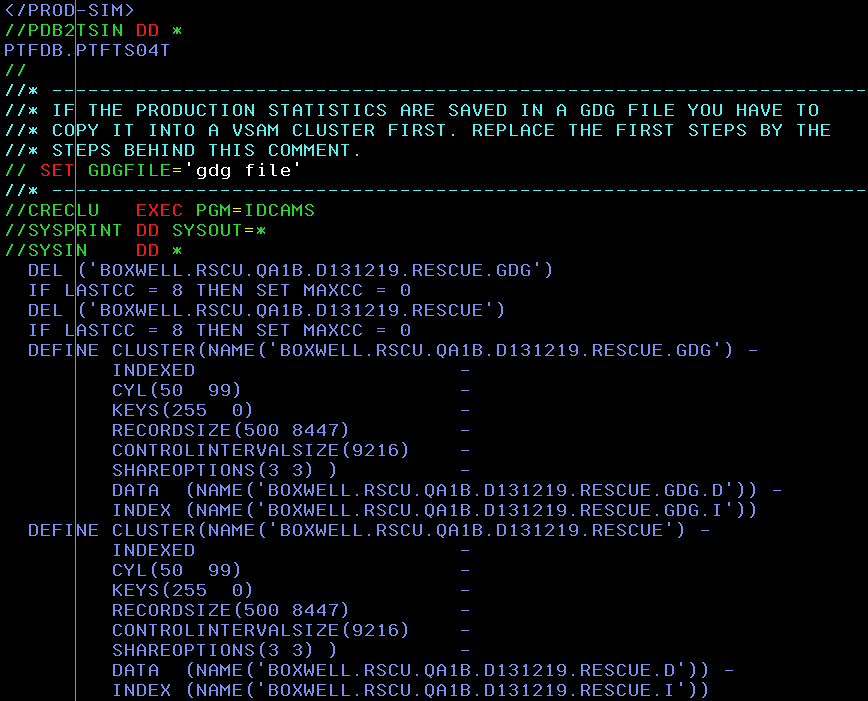

Cut and paste these control cards into some DB2 Utility JCL

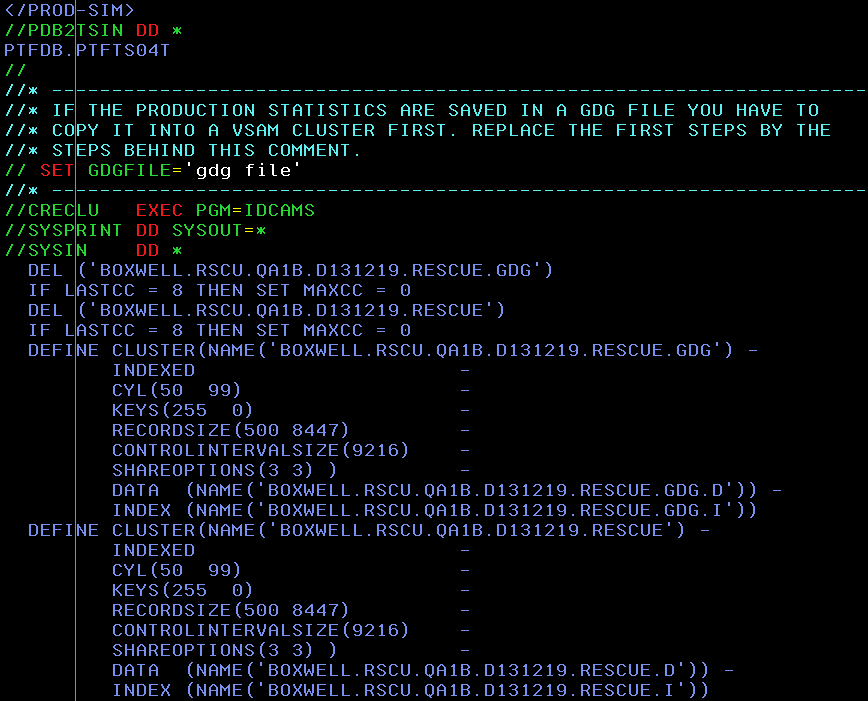

Now you must cut and paste these control cards into some DB2 Utility JCL and get them all run.

The next query lists out all the index data you need from the above databases:

-- NOW LIST INDEX OBID'S ON THE ABOVE DATABASES THAT MUST BE SEARCHED

-- FOR IN THE REPAIR SYSOUT BY USING "F OBDINNUM" AND CHECKING IF THE

-- OBID'S MATCH

SELECT SUBSTR(TS.DBNAME , 1 , 8) AS DB_NAME,

HEX(IX.OBID) AS INDEX_OBID,

SUBSTR(STRIP(IX.CREATOR) CONCAT '.' CONCAT

STRIP(IX.NAME) , 1 , 71) AS INDEX

FROM SYSIBM.SYSINDEXES IX,

SYSIBM.SYSTABLES TB,

SYSIBM.SYSTABLESPACE TS

WHERE TB.NAME = IX.TBNAME

AND TB.CREATOR = IX.TBCREATOR

AND TB.TSNAME = TS.NAME

AND TB.DBNAME = TS.DBNAME

AND (TS.PARTITIONS > 254

OR TS.TYPE = 'L'

OR TS.TYPE = 'K'

OR TS.DSSIZE > 0)

AND TS.PARTITIONS <> 0

AND (TS.STATUS = 'C'

OR NOT ((IX.INDEXTYPE = 'P'

OR IX.INDEXTYPE = 'D')

AND TB.PARTKEYCOLNUM <> 0))

AND TS.DBNAME <> 'DSNDB06'

ORDER BY 1 , 2

;

The output looks like:

---------+---------+---------+---------+----------

DB_NAME INDEX_OBID INDEX

---------+---------+---------+---------+---------

A140XO82 000C USER001.RESB~0

ANKDB1 000A ANKDB1.ANKIX11

ANKDB3 0008 ANKDB3.ANKIX3 . . .

Do “F OBDINNUM” in the REPAIR job output

Now comes the really horrible bit – you must now do “F OBDINNUM” in the REPAIR job output and see if you have any matches for the above indexes in the relevant database. Again, if you have none then congratulations, you have no problem!

If you find data like this (just as in the IBM APAR description):

DSNU916I DSNUEDFT - OBDS DIFFER AT OFFSET X'00000052'

OBDINNUM - INFORMATION NOT IN DB2 CATALOG

DSNU904I DSNUEDFT - EXISTING VALUE X'0100'

DSNU905I DSNUEDFT - REBUILT VALUE X'1000'

DSNU913I DSNUEDDR - OBD X'0056' COMPARISON COMPLETE -- 0 ERRORS

Top of page

then you must now do the next step to generate the corrective ALTER flip-flops:

-- CREATE DRIVER TABLE FOR CARTESIAN JOIN PROCESSING

DECLARE GLOBAL TEMPORARY TABLE DRIVER (NUMBER SMALLINT) ;

INSERT INTO SESSION.DRIVER VALUES 1 ;

INSERT INTO SESSION.DRIVER VALUES 2 ;

INSERT INTO SESSION.DRIVER VALUES 3 ;

INSERT INTO SESSION.DRIVER VALUES 4 ;

INSERT INTO SESSION.DRIVER VALUES 5 ;

INSERT INTO SESSION.DRIVER VALUES 6 ;

-- NOW GENERATE CORRECTIVE CLOSE FLIPS ONE FOR NO -> YES

-- AND ONE FOR YES -> NO

-- NO -> YES CLOSERULE FLIP CONTROL CARDS

WITH T1 ( IXNAME

, DBNAME

, OBID)

AS ( SELECT STRIP(IX.CREATOR) CONCAT '.' CONCAT STRIP(IX.NAME)

, TS.DBNAME

, IX.OBID

FROM SYSIBM.SYSINDEXES IX,

SYSIBM.SYSTABLES TB,

SYSIBM.SYSTABLESPACE TS

WHERE TB.NAME = IX.TBNAME

AND TB.CREATOR = IX.TBCREATOR

AND TB.TSNAME = TS.NAME

AND TB.DBNAME = TS.DBNAME

AND (TS.PARTITIONS > 254

OR TS.TYPE = 'L'

OR TS.TYPE = 'K'

OR TS.DSSIZE > 0)

AND TS.PARTITIONS <> 0

AND (TS.STATUS = 'C'

OR NOT ((IX.INDEXTYPE = 'P'

OR IX.INDEXTYPE = 'D')

AND TB.PARTKEYCOLNUM <> 0))

AND TS.DBNAME <> 'DSNDB06'

AND IX.CLOSERULE = 'N'

ORDER BY TS.DBNAME , HEX(IX.OBID)

)

SELECT CAST(CASE NUMBER

WHEN 1 THEN 'ALTER INDEX'

WHEN 2 THEN IXNAME

WHEN 3 THEN 'CLOSE YES

Which generates the first set of flip-flops from YES -> NO:

ALTER INDEX

D9999TOP.BADBIGIX

CLOSE YES $

ALTER INDEX

D9999TOP.BADBIGIX

CLOSE NO $

ALTER INDEX

D9999TOP.BADBIGIB

CLOSE YES $

ALTER INDEX

D9999TOP.BADBIGIB

CLOSE NO $

And then the next flip-flop:

-- CREATE DRIVER TABLE FOR CARTESIAN JOIN PROCESSING

DECLARE GLOBAL TEMPORARY TABLE DRIVER (NUMBER SMALLINT) ;

INSERT INTO SESSION.DRIVER VALUES 1 ;

INSERT INTO SESSION.DRIVER VALUES 2 ;

INSERT INTO SESSION.DRIVER VALUES 3 ;

INSERT INTO SESSION.DRIVER VALUES 4 ;

INSERT INTO SESSION.DRIVER VALUES 5 ;

INSERT INTO SESSION.DRIVER VALUES 6 ;

-- YES -> NO CLOSERULE FLIP CONTROL CARDS

WITH T1 ( IXNAME

, DBNAME

, OBID)

AS ( SELECT STRIP(IX.CREATOR) CONCAT '.' CONCAT STRIP(IX.NAME)

, TS.DBNAME

, IX.OBID

FROM SYSIBM.SYSINDEXES IX,

SYSIBM.SYSTABLES TB,

SYSIBM.SYSTABLESPACE TS

WHERE TB.NAME = IX.TBNAME

AND TB.CREATOR = IX.TBCREATOR

AND TB.TSNAME = TS.NAME

AND TB.DBNAME = TS.DBNAME

AND (TS.PARTITIONS > 254

OR TS.TYPE = 'L'

OR TS.TYPE = 'K'

OR TS.DSSIZE > 0)

AND TS.PARTITIONS <> 0

AND (TS.STATUS = 'C'

OR NOT ((IX.INDEXTYPE = 'P'

OR IX.INDEXTYPE = 'D')

AND TB.PARTKEYCOLNUM <> 0))

AND TS.DBNAME <> 'DSNDB06'

AND IX.CLOSERULE = 'Y'

ORDER BY TS.DBNAME , HEX(IX.OBID)

)

SELECT CAST(CASE NUMBER

WHEN 1 THEN 'ALTER INDEX'

WHEN 2 THEN IXNAME

WHEN 3 THEN 'CLOSE NO

Which generates NO -> YES format:

ALTER INDEX

USER001.RESB~0

CLOSE NO $

ALTER INDEX

USER001.RESB~0

CLOSE YES $

Top of page

Note that the above ALTERs use a $ as terminator, so you must either use a C ALL $ ; style ISPF command or just use a –#SET TERMINATOR $ line in the SPUFI.

Automatic parse of the millions of lines of REPAIR output and *only* outputs OBIDs

At this point, when even I had a HUGE list of candidates, I decided to automate it even further so I wrote a little REXX that parses the millions of lines of REPAIR output and *only* outputs OBIDs for real bad guys. That way you can see what you really have to do very quickly. Here’s how my output looks:

Began checking at: 2014-02-11-12.34.08.752641

Database A140XO82 started.

Database A140XO82 ended.

Database ANKDB1 started.

Database ANKDB1 ended.

Database ANKDB3 started.

Database ANKDB3 ended.

Database D9999TOP started.

OBID X'0004' is X'0100' rebuilt X'1000'

OBID X'0006' is X'0100' rebuilt X'1000'

OBID X'0008' is X'0100' rebuilt X'1000'

OBID X'000A' is X'0100' rebuilt X'1000'

OBID X'000C' is X'0100' rebuilt X'1000'

OBID X'000E' is X'0100' rebuilt X'1000'

OBID X'0010' is X'0100' rebuilt X'1000'

Database D9999TOP ended.

Database DATAPBG2 started.

Database DATAPBG2 ended.

.

.

.

Here you can see that my first actual problem database is the D9999TOP. When I then plug that into my flip flop generator by using cut-and-paste, for both cases, like this:

AND TB.DBNAME = 'D9999TOP'

AND HEX(IX.OBID) IN (

'0004'

, '0006'

, '0008'

, '000A'

, '000C'

, '000E'

, '0010'

)

I get the following output:

ALTER INDEX

D9999TOP.BADBIGIA

CLOSE YES $

ALTER INDEX

D9999TOP.BADBIGIA

CLOSE NO $

ALTER INDEX

D9999TOP.BADBIGIB

CLOSE YES $

ALTER INDEX

D9999TOP.BADBIGIB

CLOSE NO $

ALTER INDEX

D9999TOP.BADBIGIC

CLOSE YES $

ALTER INDEX

D9999TOP.BADBIGIC

CLOSE NO $

ALTER INDEX

D9999TOP.BADBIGID

CLOSE YES $

ALTER INDEX

D9999TOP.BADBIGID

CLOSE NO $

ALTER INDEX

D9999TOP.BADBIGIE

CLOSE YES $

ALTER INDEX

D9999TOP.BADBIGIE

CLOSE NO $

ALTER INDEX

D9999TOP.BADBIGIF

CLOSE YES $

ALTER INDEX

D9999TOP.BADBIGIF

CLOSE NO $

ALTER INDEX

D9999TOP.BADBIGIG

CLOSE YES $

ALTER INDEX

D9999TOP.BADBIGIG

CLOSE NO $

Top of page

Reach your real maximum number of pieces!

There was no output from the other query, so once these ALTERs ran my work on this database was done and I could happily move onto the next.

This is much quicker than searching through REPAIR sysout! The REXX should be copied to a PROC library and called NPI. Then using the JCL (which must tailored for your site, of course!) to execute it – and Bob’s your uncle!

Once all of the ALTERs have been executed then, finally, all of your NPIs can actually reach their real maximum number of pieces!

This month I also used (stole!) the neat Cartesian Join idea from Marcus Davage’s blog: http://spufidoo.wordpress.com/2013/02/28/judicious-cartesian-joins/

that was posted on Listserv and everyone loved it instantly!

As usual any questions or comments are welcome,

TTFN Roy Boxwell

Senior Software Architect

WHEN 4 THEN 'ALTER INDEX' WHEN 5 THEN IXNAME WHEN 6 THEN 'CLOSE NO

Which generates the first set of flip-flops from YES -> NO:

And then the next flip-flop:

Which generates NO -> YES format:

Top of page

Note that the above ALTERs use a $ as terminator, so you must either use a C ALL $ ; style ISPF command or just use a –#SET TERMINATOR $ line in the SPUFI.

Automatic parse of the millions of lines of REPAIR output and *only* outputs OBIDs

At this point, when even I had a HUGE list of candidates, I decided to automate it even further so I wrote a little REXX that parses the millions of lines of REPAIR output and *only* outputs OBIDs for real bad guys. That way you can see what you really have to do very quickly. Here’s how my output looks:

Here you can see that my first actual problem database is the D9999TOP. When I then plug that into my flip flop generator by using cut-and-paste, for both cases, like this:

I get the following output:

Top of page

Reach your real maximum number of pieces!

There was no output from the other query, so once these ALTERs ran my work on this database was done and I could happily move onto the next.

This is much quicker than searching through REPAIR sysout! The REXX should be copied to a PROC library and called NPI. Then using the JCL (which must tailored for your site, of course!) to execute it – and Bob’s your uncle!

Once all of the ALTERs have been executed then, finally, all of your NPIs can actually reach their real maximum number of pieces!

This month I also used (stole!) the neat Cartesian Join idea from Marcus Davage’s blog: http://spufidoo.wordpress.com/2013/02/28/judicious-cartesian-joins/

that was posted on Listserv and everyone loved it instantly!

As usual any questions or comments are welcome,

TTFN Roy Boxwell

Senior Software Architect

END AS CHAR(72)) FROM T1, SESSION.DRIVER ORDER BY DBNAME, OBID, NUMBER ;

Which generates the first set of flip-flops from YES -> NO:

And then the next flip-flop:

Which generates NO -> YES format:

Top of page

Note that the above ALTERs use a $ as terminator, so you must either use a C ALL $ ; style ISPF command or just use a –#SET TERMINATOR $ line in the SPUFI.

Automatic parse of the millions of lines of REPAIR output and *only* outputs OBIDs

At this point, when even I had a HUGE list of candidates, I decided to automate it even further so I wrote a little REXX that parses the millions of lines of REPAIR output and *only* outputs OBIDs for real bad guys. That way you can see what you really have to do very quickly. Here’s how my output looks:

Here you can see that my first actual problem database is the D9999TOP. When I then plug that into my flip flop generator by using cut-and-paste, for both cases, like this:

I get the following output:

Top of page

Reach your real maximum number of pieces!

There was no output from the other query, so once these ALTERs ran my work on this database was done and I could happily move onto the next.

This is much quicker than searching through REPAIR sysout! The REXX should be copied to a PROC library and called NPI. Then using the JCL (which must tailored for your site, of course!) to execute it – and Bob’s your uncle!

Once all of the ALTERs have been executed then, finally, all of your NPIs can actually reach their real maximum number of pieces!

This month I also used (stole!) the neat Cartesian Join idea from Marcus Davage’s blog: http://spufidoo.wordpress.com/2013/02/28/judicious-cartesian-joins/

that was posted on Listserv and everyone loved it instantly!

As usual any questions or comments are welcome,

TTFN Roy Boxwell

Senior Software Architect

WHEN 4 THEN 'ALTER INDEX' WHEN 5 THEN IXNAME WHEN 6 THEN 'CLOSE YES

Which generates NO -> YES format:

Top of page

Note that the above ALTERs use a $ as terminator, so you must either use a C ALL $ ; style ISPF command or just use a –#SET TERMINATOR $ line in the SPUFI.

Automatic parse of the millions of lines of REPAIR output and *only* outputs OBIDs

At this point, when even I had a HUGE list of candidates, I decided to automate it even further so I wrote a little REXX that parses the millions of lines of REPAIR output and *only* outputs OBIDs for real bad guys. That way you can see what you really have to do very quickly. Here’s how my output looks:

Here you can see that my first actual problem database is the D9999TOP. When I then plug that into my flip flop generator by using cut-and-paste, for both cases, like this:

I get the following output:

Top of page

Reach your real maximum number of pieces!

There was no output from the other query, so once these ALTERs ran my work on this database was done and I could happily move onto the next.

This is much quicker than searching through REPAIR sysout! The REXX should be copied to a PROC library and called NPI. Then using the JCL (which must tailored for your site, of course!) to execute it – and Bob’s your uncle!

Once all of the ALTERs have been executed then, finally, all of your NPIs can actually reach their real maximum number of pieces!

This month I also used (stole!) the neat Cartesian Join idea from Marcus Davage’s blog: http://spufidoo.wordpress.com/2013/02/28/judicious-cartesian-joins/

that was posted on Listserv and everyone loved it instantly!

As usual any questions or comments are welcome,

TTFN Roy Boxwell

Senior Software Architect

WHEN 4 THEN 'ALTER INDEX' WHEN 5 THEN IXNAME WHEN 6 THEN 'CLOSE NO

Which generates the first set of flip-flops from YES -> NO:

And then the next flip-flop:

Which generates NO -> YES format:

Top of page

Note that the above ALTERs use a $ as terminator, so you must either use a C ALL $ ; style ISPF command or just use a –#SET TERMINATOR $ line in the SPUFI.

Automatic parse of the millions of lines of REPAIR output and *only* outputs OBIDs

At this point, when even I had a HUGE list of candidates, I decided to automate it even further so I wrote a little REXX that parses the millions of lines of REPAIR output and *only* outputs OBIDs for real bad guys. That way you can see what you really have to do very quickly. Here’s how my output looks:

Here you can see that my first actual problem database is the D9999TOP. When I then plug that into my flip flop generator by using cut-and-paste, for both cases, like this:

I get the following output:

Top of page

Reach your real maximum number of pieces!

There was no output from the other query, so once these ALTERs ran my work on this database was done and I could happily move onto the next.

This is much quicker than searching through REPAIR sysout! The REXX should be copied to a PROC library and called NPI. Then using the JCL (which must tailored for your site, of course!) to execute it – and Bob’s your uncle!

Once all of the ALTERs have been executed then, finally, all of your NPIs can actually reach their real maximum number of pieces!

This month I also used (stole!) the neat Cartesian Join idea from Marcus Davage’s blog: http://spufidoo.wordpress.com/2013/02/28/judicious-cartesian-joins/

that was posted on Listserv and everyone loved it instantly!

As usual any questions or comments are welcome,

TTFN Roy Boxwell

Senior Software Architect

END AS CHAR(72)) FROM T1, SESSION.DRIVER ORDER BY DBNAME, OBID, NUMBER ;

Which generates the first set of flip-flops from YES -> NO:

And then the next flip-flop:

Which generates NO -> YES format:

Top of page

Note that the above ALTERs use a $ as terminator, so you must either use a C ALL $ ; style ISPF command or just use a –#SET TERMINATOR $ line in the SPUFI.

Automatic parse of the millions of lines of REPAIR output and *only* outputs OBIDs

At this point, when even I had a HUGE list of candidates, I decided to automate it even further so I wrote a little REXX that parses the millions of lines of REPAIR output and *only* outputs OBIDs for real bad guys. That way you can see what you really have to do very quickly. Here’s how my output looks:

Here you can see that my first actual problem database is the D9999TOP. When I then plug that into my flip flop generator by using cut-and-paste, for both cases, like this:

I get the following output:

Top of page

Reach your real maximum number of pieces!

There was no output from the other query, so once these ALTERs ran my work on this database was done and I could happily move onto the next.

This is much quicker than searching through REPAIR sysout! The REXX should be copied to a PROC library and called NPI. Then using the JCL (which must tailored for your site, of course!) to execute it – and Bob’s your uncle!

Once all of the ALTERs have been executed then, finally, all of your NPIs can actually reach their real maximum number of pieces!

This month I also used (stole!) the neat Cartesian Join idea from Marcus Davage’s blog: http://spufidoo.wordpress.com/2013/02/28/judicious-cartesian-joins/

that was posted on Listserv and everyone loved it instantly!

As usual any questions or comments are welcome,

TTFN Roy Boxwell

Senior Software Architect

END AS CHAR(72)) FROM T1, SESSION.DRIVER ORDER BY DBNAME, OBID, NUMBER ;

Which generates NO -> YES format:

Top of page

Note that the above ALTERs use a $ as terminator, so you must either use a C ALL $ ; style ISPF command or just use a –#SET TERMINATOR $ line in the SPUFI.

Automatic parse of the millions of lines of REPAIR output and *only* outputs OBIDs

At this point, when even I had a HUGE list of candidates, I decided to automate it even further so I wrote a little REXX that parses the millions of lines of REPAIR output and *only* outputs OBIDs for real bad guys. That way you can see what you really have to do very quickly. Here’s how my output looks:

Here you can see that my first actual problem database is the D9999TOP. When I then plug that into my flip flop generator by using cut-and-paste, for both cases, like this:

I get the following output:

Top of page

Reach your real maximum number of pieces!

There was no output from the other query, so once these ALTERs ran my work on this database was done and I could happily move onto the next.

This is much quicker than searching through REPAIR sysout! The REXX should be copied to a PROC library and called NPI. Then using the JCL (which must tailored for your site, of course!) to execute it – and Bob’s your uncle!

Once all of the ALTERs have been executed then, finally, all of your NPIs can actually reach their real maximum number of pieces!

This month I also used (stole!) the neat Cartesian Join idea from Marcus Davage’s blog: http://spufidoo.wordpress.com/2013/02/28/judicious-cartesian-joins/

that was posted on Listserv and everyone loved it instantly!

As usual any questions or comments are welcome,

TTFN Roy Boxwell

Senior Software Architect

WHEN 4 THEN ‘ALTER INDEX’ WHEN 5 THEN IXNAME WHEN 6 THEN ‘CLOSE NO

Which generates the first set of flip-flops from YES -> NO:

And then the next flip-flop:

Which generates NO -> YES format:

Top of page

Note that the above ALTERs use a $ as terminator, so you must either use a C ALL $ ; style ISPF command or just use a –#SET TERMINATOR $ line in the SPUFI.

Automatic parse of the millions of lines of REPAIR output and *only* outputs OBIDs

At this point, when even I had a HUGE list of candidates, I decided to automate it even further so I wrote a little REXX that parses the millions of lines of REPAIR output and *only* outputs OBIDs for real bad guys. That way you can see what you really have to do very quickly. Here’s how my output looks:

Here you can see that my first actual problem database is the D9999TOP. When I then plug that into my flip flop generator by using cut-and-paste, for both cases, like this:

I get the following output:

Top of page

Reach your real maximum number of pieces!

There was no output from the other query, so once these ALTERs ran my work on this database was done and I could happily move onto the next.

This is much quicker than searching through REPAIR sysout! The REXX should be copied to a PROC library and called NPI. Then using the JCL (which must tailored for your site, of course!) to execute it – and Bob’s your uncle!

Once all of the ALTERs have been executed then, finally, all of your NPIs can actually reach their real maximum number of pieces!

This month I also used (stole!) the neat Cartesian Join idea from Marcus Davage’s blog: http://spufidoo.wordpress.com/2013/02/28/judicious-cartesian-joins/

that was posted on Listserv and everyone loved it instantly!

As usual any questions or comments are welcome,

TTFN Roy Boxwell

Senior Software Architect

END AS CHAR(72)) FROM T1, SESSION.DRIVER ORDER BY DBNAME, OBID, NUMBER ;

Which generates the first set of flip-flops from YES -> NO:

And then the next flip-flop:

Which generates NO -> YES format:

Top of page

Note that the above ALTERs use a $ as terminator, so you must either use a C ALL $ ; style ISPF command or just use a –#SET TERMINATOR $ line in the SPUFI.

Automatic parse of the millions of lines of REPAIR output and *only* outputs OBIDs

At this point, when even I had a HUGE list of candidates, I decided to automate it even further so I wrote a little REXX that parses the millions of lines of REPAIR output and *only* outputs OBIDs for real bad guys. That way you can see what you really have to do very quickly. Here’s how my output looks:

Here you can see that my first actual problem database is the D9999TOP. When I then plug that into my flip flop generator by using cut-and-paste, for both cases, like this:

I get the following output:

Top of page

Reach your real maximum number of pieces!

There was no output from the other query, so once these ALTERs ran my work on this database was done and I could happily move onto the next.

This is much quicker than searching through REPAIR sysout! The REXX should be copied to a PROC library and called NPI. Then using the JCL (which must tailored for your site, of course!) to execute it – and Bob’s your uncle!

Once all of the ALTERs have been executed then, finally, all of your NPIs can actually reach their real maximum number of pieces!

This month I also used (stole!) the neat Cartesian Join idea from Marcus Davage’s blog: http://spufidoo.wordpress.com/2013/02/28/judicious-cartesian-joins/

that was posted on Listserv and everyone loved it instantly!

As usual any questions or comments are welcome,

TTFN Roy Boxwell

Senior Software Architect